This Week’s Finds in Mathematical Physics (Week 294)

Posted by John Baez

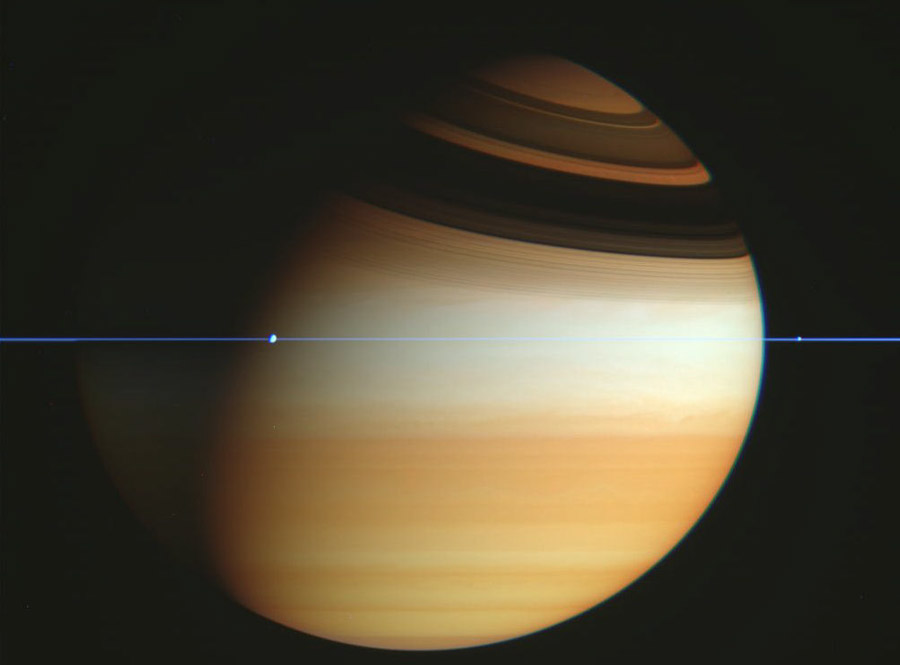

In week294 of This Week’s Finds, hear an account of Gelfand’s famous math seminar in Moscow. Read what Jan Willems thinks about control theory and bond graphs. Learn the proof of Tellegen’s theorem. Meet some categories where the morphisms are circuits, and learn why a category object in Vect is just a 2-term chain complex. Finally, gaze at Saturn’s rings edge-on:

Re: This Week’s Finds in Mathematical Physics (Week 294)

Is there a reason, by the way, why you only add meshes until things become contractible? It seems like an artificial requirement and, considering, say, circuit design for etched circuit boards with vias, not an entirely realistic one.

Why not just add all the meshes, and allow for nontrivial homology and cohomology in degree 2? It won’t hurt the exactness in degree 1…
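
To make the question concrete, here is a minimal sketch, assuming a toy example that is not from the post: take the complete graph on four vertices (the edges of a tetrahedron) as the circuit, and treat its triangular faces as the meshes. The helper names `rank` and `betti` are hypothetical, and the homology is computed over the rationals. With three faces attached, the complex is a contractible disk; with all four, it is a sphere. In both cases the first Betti number is zero, while the second jumps from 0 to 1.

```python
from fractions import Fraction
from itertools import combinations


def rank(matrix):
    """Rank of a matrix (list of rows) over the rationals, by Gaussian elimination."""
    rows = [[Fraction(x) for x in row] for row in matrix]
    ncols = len(rows[0]) if rows else 0
    r = 0  # number of pivots found so far
    for c in range(ncols):
        pivot = next((i for i in range(r, len(rows)) if rows[i][c] != 0), None)
        if pivot is None:
            continue
        rows[r], rows[pivot] = rows[pivot], rows[r]
        for i in range(len(rows)):
            if i != r and rows[i][c] != 0:
                f = rows[i][c] / rows[r][c]
                rows[i] = [a - f * b for a, b in zip(rows[i], rows[r])]
        r += 1
    return r


def betti(n_vertices, edges, faces):
    """Return (b1, b2) for a 2-complex with oriented edges (i, j), i < j,
    and triangular faces (i, j, k), i < j < k."""
    # d1 sends the edge (i, j) to vertex j minus vertex i.
    d1 = [[0] * len(edges) for _ in range(n_vertices)]
    for col, (i, j) in enumerate(edges):
        d1[i][col] -= 1
        d1[j][col] += 1
    # d2 sends the face (i, j, k) to (j, k) - (i, k) + (i, j).
    index = {e: n for n, e in enumerate(edges)}
    d2 = [[0] * len(faces) for _ in edges]
    for col, (i, j, k) in enumerate(faces):
        d2[index[(j, k)]][col] += 1
        d2[index[(i, k)]][col] -= 1
        d2[index[(i, j)]][col] += 1
    r1, r2 = rank(d1), rank(d2)
    b1 = len(edges) - r1 - r2  # dim ker d1 - dim im d2
    b2 = len(faces) - r2       # dim ker d2 (there are no 3-cells)
    return b1, b2


# Assumed toy circuit: the tetrahedron graph K4 and its triangular meshes.
edges = list(combinations(range(4), 2))
faces = list(combinations(range(4), 3))

no_meshes = betti(4, edges, [])            # bare graph: three independent loops
some_meshes = betti(4, edges, faces[:3])   # three meshes: a contractible disk
all_meshes = betti(4, edges, faces)        # all four meshes: a sphere
print(no_meshes, some_meshes, all_meshes)
```

In this example the fourth mesh adds no new relation among the edge loops, since its boundary is already a combination of the other three boundaries; all it does is create a 2-cycle.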