I am late to this discussion. Maybe I can still make a contribution.

An important point might be hidden in the observation that the atomic structure of a metal beam is a different discrete model of that beam than the finite-element approximation that a computer uses to compute properties of the beam. Still, both converge to the same continuum description in the limit.

So it seems key points raised by Eric and Mike could be summarized as:

- the object of interest (for instance: nature) might be discrete

- but still a continuum description may be useful, since it is more universal.

There are many discrete versions of the same continuum model, and they all “sit inside” the continuum model in a well-defined way.

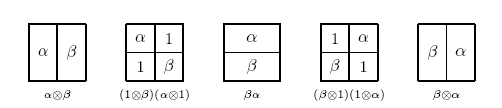

This is reminiscent, for instance, of the coalgebraic description of the real numbers that we talked about on other threads recently: the real interval is, in a precise sense, the universal solution to having an ordered set $X$ with distinct lowest and highest elements, together with a map $X \to X \vee X$ from the set to the result of gluing two copies of this set to each other along their endpoints (the top of the first copy to the bottom of the second).

There are many “discrete” solutions to this problem: take for instance $I_n = \{0, 1, \ldots, n\}$. Then $I_n \vee I_n \cong \{0, 1, \ldots, 2n\}$, and the map $I_n \to I_n \vee I_n$ is multiplication by 2.

The universal solution to finding such $X$, however, is the standard interval $[0,1]$.

It may well be that in some concrete application we are really dealing with $I_n$. But since $I_n$ is not all that different from $[0,1]$, many answers we are looking for concerning $I_n$ may essentially be just answers about $[0,1]$, which unifies in it all the $I_n$.
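To make the “multiplication by 2” example a little more tangible, here is a small sketch, assuming the reading $I_n = \{0, \ldots, n\}$ and $I_n \vee I_n \cong \{0, \ldots, 2n\}$ with the two copies glued at $n$. Iterating the structure map and recording which copy we land in produces a stream of binary digits; by terminality this behavior is what determines the unique coalgebra map $I_n \to [0,1]$, which here is $x \mapsto x/n$. The function names are hypothetical, and sending the glue point $2x = n$ to the first copy is an arbitrary choice (the other choice yields the other binary expansion of the same number).

```python
from fractions import Fraction


def wedge_step(x: int, n: int) -> tuple[int, int]:
    """Structure map I_n -> I_n v I_n, read as 'multiply by 2'.

    I_n v I_n is modeled as {0, ..., 2n}: 0..n is the first copy and
    n..2n the second copy, glued at n. Returns (which copy, position
    inside that copy). The glue point 2x == n is (arbitrarily) assigned
    to the first copy.
    """
    y = 2 * x
    if y <= n:
        return 0, y
    return 1, y - n


def digits(x: int, n: int, k: int) -> list[int]:
    """First k 'copy choices' obtained by iterating the structure map."""
    out = []
    for _ in range(k):
        d, x = wedge_step(x, n)
        out.append(d)
    return out


def approx(x: int, n: int, k: int) -> Fraction:
    """Read the digits as a binary fraction; it lies within 2**-k below x/n."""
    return sum((Fraction(d, 2 ** (i + 1)) for i, d in enumerate(digits(x, n, k))),
               Fraction(0))


if __name__ == "__main__":
    # 3/4 is read off as 0.1011111111 in binary, i.e. 767/1024
    print(approx(3, 4, 10))
```

After $k$ steps the leftover position $x_k \in \{0, \ldots, n\}$ accounts for exactly $x_k / (n \cdot 2^k)$, which is why the error is at most $2^{-k}$: the finite $I_n$ only ever sees finitely many digits, while $[0,1]$ carries all of them.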

By Adámek’s theorem, terminal coalgebras can be computed by categorical limits: the terminal coalgebra of an endofunctor $F$ is the limit of the chain $1 \leftarrow F(1) \leftarrow F(F(1)) \leftarrow \cdots$, provided $F$ preserves that limit. While I am a bit unsure whether and how this works in the above case, this fact might point to a relation between the discussion about finite/discrete versus infinite/continuous and broader issues like ind-objects and accessible categories:

if we are finitists/discretists, we might, strictly speaking, be interested only in a category of finite objects, in some sense; but for the purposes of understanding, it may be useful to consider bigger categories that may be accessed by .

terminology conventions

Twice now I have read an entry at the nLab and found myself disagreeing with the author’s choice of terminology. Of course, I proceeded to add a comment/query about it. The resulting discussion at subcategory seems to be converging, while a discussion at Grothendieck topology has yet to begin (of course, I just posted my inflammatory remarks there a few minutes ago).

This raises an interesting question: in cases of conflicting terminology, how should the nLab decide which to adopt? Wikipedia mandates a neutral point of view for all its articles, but the nLab is already intentionally taking sides on some controversial issues, as its About page says: “we do not hesitate to provide non-traditional perspectives… if we feel that these are the right perspectives, definitions and explanations from a modern unified higher categorical perspective.”

There may be little to no disagreement among contributors to the nLab about what the “right” modern unified higher categorical perspective is (although such disagreements are probably not out of the question), but there may be much more disagreement over terminology. Ideally, we could all discuss things and come to an agreement, but some new contributor might arrive in the future with strongly held differing views. Should we try to avoid making choices at all? We should certainly alert the reader to the existence of differing terminologies, but it seems desirable for the nLab as a whole to use consistent terminology. Any thoughts?