Random Permutations (Part 14)

Posted by John Baez

I want to go back over something from Part 11, but in a more systematic and self-contained way.

Namely, I want to prove a wonderful known fact about random permutations, the Cycle Length Lemma, using a bit of category theory. The idea here is that the number of -cycles in a random permutation of things is a random variable. Then comes a surprise: in the limit as , this random variable approaches a Poisson distribution with mean . And even better, for different choices of these random variables become independent in the limit.

I’m stating these facts roughly now, to not get bogged down. But I’ll state them precisely, prove them, and categorify them. That is, I’ll state equations involving random variables — but I’ll prove that these equations come from equivalences of groupoids!

First I’ll state the Cycle Length Lemma, which summarizes a lot of interesting facts about random permutations. Then I’ll state and prove a categorified version of the Cycle Length Lemma, which asserts an equivalence of groupoids. Then I’ll derive the original version of the lemma from this categorified version by taking the cardinalities of these groupoids. The categorified version contains more information, so it’s not just a trick for proving the original lemma.

What do groupoids have to do with random permutations? You’ll see, but it’s an example of ‘principle of indifference’, especially in its modern guise, called the ‘principle of transformation groups’: the idea that outcomes related by a symmetry should have the same probability. This sets up a connection between groupoids and probability theory — and as we’ll see, we can “go down” from groupoids to probabilities using the theory of groupoid cardinalities.

The Cycle Length Lemma

In the theory of random permutations, we treat the symmetric group as a probability measure space where each element has the same measure, namely . Functions then become random variables, and we can study their expected values:

An important example is the function

that counts, for any permutation , its number of cycles of length , also called -cycles. A well-known but striking fact about random permutations is that whenever , the expected number of -cycles is :

For example, a random permutation of any finite set has, on average, one fixed point!

Another striking fact is that whenever and , so that it’s possible for a permutation to have both a -cycle and a -cycle, the random variables and are uncorrelated in the following sense:

You might at first think that having lots of -cycles for some large would tend to inhibit the presence of -cycles for some other large value of , but that’s not true unless , when it suddenly becomes impossible to have both a -cycle and a -cycle!

These two facts are special cases of the Cycle Length Lemma. To state this lemma in full generality, recall that the number of ordered -tuples of distinct elements of an -element set is the falling power

It follows that the function

counts, for any permutation in , its ordered -tuples of distinct -cycles. We can also replace the word ‘distinct’ here by ‘disjoint’, without changing the meaning, since distinct cycles must be disjoint.

The two striking facts mentioned above generalize as follows:

1) First, whenever , so that it is possible for a permutation in to have distinct -cycles, then

If you know about the moments of a Poisson distribution here’s a nice equivalent way to state this equation: when , the th moment of the random variable equals that of a Poisson distribution with mean .

2) Second, the random variables are better and better approximated by independent Poisson distributions. To state this precisely we need a bit of notation. Let denote an -tuple of natural numbers, and let

If , it is possible for a permutation to have a collection of distinct cycles, with cycles of length 1, cycles of length 2, and so on up to cycles of length . If , this is impossible. In the former case, where , we always have

Taken together, 1) and 2) are equivalent to the Cycle Length Lemma, which may be stated in a unified way as follows:

Cycle Length Lemma. Suppose . Then

This appears, for example, in Ford’s course notes on random permutations and the statistical properties of prime numbers [Lemma 1.1, F]. The most famous special case is when . Apparently this goes back to Cauchy, but I don’t know where he proved it. I believe he would have phrased it in terms of counting permutations, not probabilities.

I won’t get into details of precisely the sense in which random variables approach independent Poisson distributions. For that, see Arratia and Tavaré [AT].

The Categorified Cycle Length Lemma

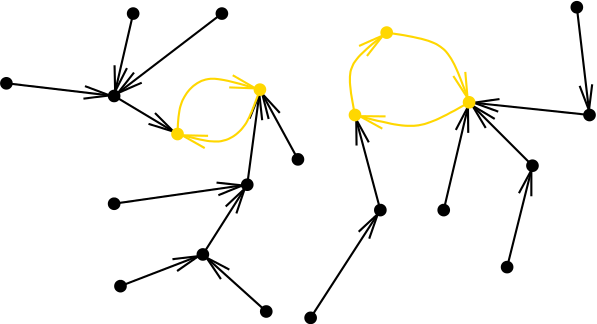

To categorify the Cycle Length Lemma, the key is to treat a permutation as an extra structure that we can put on a set, and then consider the groupoid of -element sets equipped with this extra structure:

Definition. Let be the groupoid in which

- an object is an -element set equipped with a permutation

and

- a morphism from to is a bijection that is permutation-preserving in the following sense:

We’ll need this strange fact below: if then is the empty groupoid (that is, the groupoid with no objects and no morphisms).

More importantly, we’ll need a fancier groupoid where a set is equipped with a permutation together with a list of distinct cycles of specified lengths. For any and any -tuple of natural numbers , recall that we have defined

Definition. Let be the groupoid of -element sets equipped with a permutation that is in turn equipped with a choice of an ordered -tuple of distinct -cycles, an ordered -tuple of distinct -cycles, and so on up to an ordered -tuple of distinct -cycles. A morphism in this groupoid is a bijection that is permutation-preserving and also preserves the ordered tuples of distinct cycles.

Note that if , no choice of disjoint cycles with the specified property exists, so is the empty groupoid.

Finally, we need a bit of standard notation. For any group we write for its delooping: that is, the groupoid that has one object and .

The Categorified Cycle Length Lemma. For any we have

Proof. Both sides are empty groupoids when , so assume . A groupoid is equivalent to any full subcategory of that groupoid containing at least one object from each isomorphism class. So, fix an -element set and a subset with elements. Partition into subsets where has cardinality , , and . Every object of is isomorphic to the chosen set equipped with some permutation that has each subset as a -cycle. Thus is equivalent to its full subcategory containing only objects of this form.

An object of this form consists of an arbitrary permutation and a cyclic permutation for each as above. Consider a second object of this form, say equipped with cyclic permutations . Then a morphism from the first object to the second consists of two pieces of data. First, a bijection

such that

Second, for each as above, bijections

such that

Since has elements, while and are cyclic permutations of -element sets, it follows that is equivalent to

The case where is especially pretty, since then our chosen cycles completely fill up our -element set and we have

Groupoid Cardinality

The cardinality of finite sets has a natural extension to finite groupoids, and this turns out to be the key to extracting results on random permutations from category theory. Let’s briefly recall the idea of ‘groupoid cardinality’ [BD,BHW]. Any finite groupoid is equivalent to a coproduct of finitely many one-object groupoids, which are deloopings of finite groups :

and then the cardinality of is defined to be

This concept of groupoid cardinality has various nice properties. For example it’s additive:

and multiplicative:

and invariant under equivalence of groupoids:

But none of these three properties require that we define as the sum of the reciprocals of the cardinalities : any other power of these cardinalities would work just as well. What makes the reciprocal cardinalities special is that if is a finite group acting on a set , we have

where the groupoid is the weak quotient or homotopy quotient of by , also called the action groupoid. This is the groupoid with elements of as objects and one morphism from to for each with , with composition of morphisms coming from multiplication in .

The groupoid of -element sets equipped with permutation, , has a nice description in terms of weak quotients:

Lemma. For all we have an equivalence of groupoids

where the group acts on the underlying set of by conjugation.

Proof. We use the fact that is equivalent to any full subcategory of containing at least one object from each isomorphism class. For we can get such a subcategory by fixing an -element set, say , and taking only objects of the form , i.e. . A morphism from to is then a permutation such that

But this subcategory is precisely . ▮

Corollary. For all we have

Proof. We have . ▮

It should now be clear why we can prove results on random permutations using the groupoid : this groupoid is equivalent to , a groupoid with one object for each permutation , and with each object contributing to the groupoid cardinality.

Now let us use this idea to derive the original Cycle Length Lemma from the categorified version.

Cycle Length Lemma. Suppose . Then

Proof. We know that

So, to prove the Cycle Length Lemma it suffices to show three things:

and

The last of these is immediate from the definition of groupoid cardinality. The second follows from the Corollary above, together with the fact that is the empty groupoid when . Thus we are left needing to show that

We prove this by computing the cardinality of a groupoid equivalent to . This groupoid is of the form

where is a set on which acts. As a result we have

and to finish the proof we will need to show

What is the set , and how does act on this set? An element of is a permutation equipped with an ordered -tuple of distinct -cycles, an ordered -tuple of distinct -cycles, and so on up to an ordered -tuple of distinct -cycles. Any element acts on in a natural way, by conjugating the permutation to obtain a new permutation, and mapping the chosen cycles of to the corresponding cycles of this new permutation .

Recalling the definition of the groupoid , it is clear that any element of gives an object of , and any object is isomorphic to one of this form. Furthermore any permutation gives a morphism between such objects, all morphisms between such objects are of this form, and composition of these morphisms is just multiplication in . It follows that

To finish the proof, note that

is times the number of ways of choosing a permutation and equipping it with an ordered -tuple of distinct -cycles, an ordered -tuple of distinct -cycles, and so on. This is the same as . ▮

References

[AT] Richard Arratia and Simon Tavaré, The cycle structure of random permutations, The Annals of Probability (1992), 1567–1591.

[BD] John C. Baez and James Dolan, From finite sets to Feynman diagrams, in Mathematics Unlimited—2001 and Beyond, vol. 1, eds. Björn Engquist and Wilfried Schmid, Springer, Berlin, 2001, pp. 29–50.

[BHW] John C. Baez, Alexander E. Hoffnung and Christopher D. Walker, Higher-dimensional algebra VII: groupoidification, Theory and Applications of Categories 24 (2010), 489–553.

[F] Kevin Ford, Anatomy of Integers and Random Permutations—Course Lecture Notes.

Re: Random Permutations (Part 14)

Wow, this is lovely. It may be a silly question, but could the cycle length lemma imply relations in some kind of Burnside ring?