Linear Operators Done Right

Posted by Tom Leinster

A conversation prompted by Simon’s last post reminded me of an analogy that’s too excellent to be buried in a comments thread. It must be very well-known, but I’ll go ahead and describe it anyway.

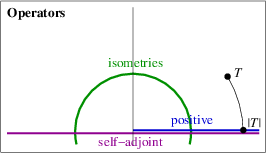

The analogy is between complex numbers and linear operators on an inner product space. Its best feature is that it makes important properties of complex numbers correspond to important properties of operators.

The title of this post refers to Sheldon Axler’s beautiful book Linear Algebra Done Right, which I’ve written about before. Most of what I’ll say can be found in Chapter 7. It’s one of those texts that feels like a piece of category theory even though it’s not actually about categories.

Today, all vector spaces are over $\mathbb{C}$ and finite-dimensional. Most (all?) of what I’ll say can be done in more sophisticated functional-analytic settings, but I’ll stick to this most basic of situations.

Fix a vector space $X$ equipped with an inner product. By an operator on $X$, I mean a linear map $X \to X$.

Here’s how the analogy goes.

Complex numbers are like operators

This is the basis of everything that follows.

There’s not much substance to this statement yet. For now, let’s just observe that both the complex numbers $\mathbb{C}$ and the operators on $X$ form rings. I’ll write $\mathrm{End}(X)$ for the ring of operators on $X$, following the usual categorical custom. (“End” stands for “endomorphisms”.)

The two rings $\mathbb{C}$ and $\mathrm{End}(X)$ don’t seem very similar. Unlike $\mathbb{C}$, the ring $\mathrm{End}(X)$ isn’t commutative and usually has nontrivial zero-divisors. (Indeed, as long as $\dim X \geq 2$, there is some $T \in \mathrm{End}(X)$ with $T \neq 0$ but $T^2 = 0$.) Perhaps surprisingly, these differences don’t prevent the development of this useful analogy.
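The zero-divisor claim can be checked on the smallest possible case. This is a minimal sketch using plain nested lists; the particular matrix is an illustrative choice, not one taken from the post.

```python
def matmul(a, b):
    """Multiply two square matrices given as nested lists."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

# T sends the second basis vector to the first and kills the first,
# so T is nonzero but applying it twice gives zero.
T = [[0, 1],
     [0, 0]]
ZERO = [[0, 0],
        [0, 0]]

T_squared = matmul(T, T)
print(T != ZERO, T_squared == ZERO)
```

Nothing like this can happen in $\mathbb{C}$, where $z^2 = 0$ forces $z = 0$.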

In some loose sense, we can pass back and forth between $\mathbb{C}$ and $\mathrm{End}(X)$. In one direction, starting with a complex number $\lambda$, we get the operator $x \mapsto \lambda x$. In elementary texts, this operator is often written as $\lambda I$, but I’ll almost always write it as just $\lambda$.

In the opposite direction, starting with an operator $T$ on $X$, we get not just a single complex number but a collection of them: namely, its eigenvalues.

Complex conjugates are like adjoints

Every complex number $z$ has a complex conjugate $z^*$. Taking complex conjugates defines a self-inverse automorphism of the ring $\mathbb{C}$.

Every linear map $T \colon X \to Y$ of inner product spaces has an adjoint $T^* \colon Y \to X$, characterized by the equation $\langle T x, y \rangle = \langle x, T^* y \rangle$. In particular, every operator $T$ on $X$ has an adjoint $T^*$, also an operator on $X$.

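It’s almost true that taking adjoints defines a self-inverse automorphism of $\mathrm{End}(X)$. The only obstruction is that taking adjoints reverses the order of composition: $(S T)^* = T^* S^*$. So actually, taking adjoints defines a pair of mutually inverse ring isomorphisms

$$\mathrm{End}(X) \rightleftarrows \mathrm{End}(X)^{\mathrm{op}}$$

where $\mathrm{End}(X)^{\mathrm{op}}$ is the ring $\mathrm{End}(X)$ with its order of multiplication reversed.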
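The order reversal is easy to confirm numerically. The sketch below assumes the standard inner product on $\mathbb{C}^2$, so that the adjoint of a matrix is its conjugate transpose; the two matrices are arbitrary illustrative choices.

```python
def matmul(a, b):
    """Multiply two square matrices given as nested lists."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

def adjoint(a):
    """Conjugate transpose, i.e. the adjoint for the standard inner product."""
    n = len(a)
    return [[complex(a[j][i]).conjugate() for j in range(n)] for i in range(n)]

S = [[1 + 2j, 0], [3, 1j]]
T = [[2, 1 - 1j], [1j, 4]]

adjoint_of_composite = adjoint(matmul(S, T))        # (ST)*
reversed_order = matmul(adjoint(T), adjoint(S))     # T* S*
same_order = matmul(adjoint(S), adjoint(T))         # S* T*

print(adjoint_of_composite == reversed_order)
print(adjoint_of_composite == same_order)
```

The entries here are Gaussian integers, so the floating-point arithmetic is exact and plain `==` is a fair comparison.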

What about those back-and-forth passages between complex numbers and operators?

First, start with a complex number $\lambda$; then the adjoint of the operator $\lambda$ is $\lambda^*$. That is, $(\lambda I)^* = \lambda^* I$. This is why I’m writing $z^*$ for the complex conjugate of $z$, rather than the more common $\bar{z}$.

Second, start with an operator $T$. Then the eigenvalues of $T^*$ are exactly the conjugates of the eigenvalues of $T$. Why? Because taking the adjoint defines an isomorphism of rings, so $T - \lambda$ is invertible iff $(T - \lambda)^* = T^* - \lambda^*$ is.
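As a small worked instance (an illustrative matrix, taken with respect to the standard inner product on $\mathbb{C}^2$):

$$
T = \begin{pmatrix} i & 1 \\ 0 & 2 \end{pmatrix},
\qquad
T^* = \begin{pmatrix} -i & 0 \\ 1 & 2 \end{pmatrix}.
$$

Both matrices are triangular, so their eigenvalues can be read off the diagonal: $i$ and $2$ for $T$, and $-i$ and $2$ for $T^*$, which are the conjugates.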

Real numbers are like self-adjoint operators

A complex number $z$ is real if and only if $z^* = z$. By definition, an operator $T$ is self-adjoint if and only if $T^* = T$.

Again, let’s look at the passages back and forth between $\mathbb{C}$ and $\mathrm{End}(X)$. First, let $\lambda \in \mathbb{C}$. As long as $X$ is nontrivial, the operator $\lambda$ is self-adjoint iff $\lambda$ is real.

Second, if $T$ is a self-adjoint operator then all its eigenvalues are real. The converse isn’t true: an operator can have all real eigenvalues without being self-adjoint. We’ll come back to that.
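For a counterexample to the converse, one illustrative choice (with respect to the standard inner product on $\mathbb{C}^2$) is

$$
T = \begin{pmatrix} 1 & 1 \\ 0 & 1 \end{pmatrix},
\qquad
T^* = \begin{pmatrix} 1 & 0 \\ 1 & 1 \end{pmatrix} \neq T.
$$

The only eigenvalue of $T$ is $1$, which is real, yet $T$ is not self-adjoint.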

Any even half-serious endeavour involving self-adjoint operators makes use of the theorem that classifies them, the spectral theorem. Loosely put, this states that every self-adjoint operator is an orthogonal sum of self-adjoint operators of the most simple kind: scalar multiplication by a real number.

Precisely: given any self-adjoint operator $T$, there is a unique orthogonal decomposition $X = \bigoplus_{\lambda \in \mathbb{R}} X_\lambda$ such that for each $\lambda$, the restriction of $T$ to $X_\lambda$ is multiplication by $\lambda$. Of course, all but finitely many of these subspaces $X_\lambda$ are trivial, the nontrivial ones are those for which $\lambda$ is an eigenvalue, and $X_\lambda$ is the eigenspace $\ker(T - \lambda)$.
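Here is the decomposition for one small self-adjoint operator on $\mathbb{C}^2$ with its standard inner product (the matrix is an illustrative choice):

$$
T = \begin{pmatrix} 2 & 1 \\ 1 & 2 \end{pmatrix},
\qquad
T \begin{pmatrix} 1 \\ 1 \end{pmatrix} = 3 \begin{pmatrix} 1 \\ 1 \end{pmatrix},
\qquad
T \begin{pmatrix} 1 \\ -1 \end{pmatrix} = 1 \begin{pmatrix} 1 \\ -1 \end{pmatrix}.
$$

So $X_3$ is spanned by $(1, 1)$, $X_1$ is spanned by $(1, -1)$, every other $X_\lambda$ is trivial, and $\mathbb{C}^2 = X_1 \oplus X_3$. The two spanning vectors have inner product $1 \cdot 1 + 1 \cdot (-1) = 0$, so the sum is orthogonal.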

Nonnegative real numbers are like positive operators

For a complex number $z$, the following are equivalent:

- (1) $z$ is nonnegative, i.e. real and $\geq 0$

- (2) $z = w^* w$ for some complex $w$

- (3) $z = w^2$ ($= w^* w$) for some real $w$

- (4) $z = w^2$ ($= w^* w$) for some nonnegative $w$

- (5) $z = w^2$ ($= w^* w$) for a unique nonnegative $w$.

I’ll follow custom and say that an operator is positive if it is self-adjoint and each eigenvalue is $\geq 0$. (Other names are “positive semidefinite” and “nonnegative definite”. As we were recently discussing, the terminology around positive/nonnegative is a bit of a mess.) Note that by definition, “positive” includes “self-adjoint”. This is just like the convention that when we call a complex number “nonnegative”, we tacitly include the condition “real”.

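For an operator $T$ on $X$, the following are equivalent:

- (1) $T$ is positive, i.e. self-adjoint and each eigenvalue is $\geq 0$

- (1.5) $T$ is self-adjoint and $\langle T x, x \rangle \geq 0$ for all $x \in X$

- (2) $T = S^* S$ for some inner product space $Y$ and linear map $S \colon X \to Y$

- (2.5) $T = S^* S$ for some operator $S$ on $X$

- (3) $T = S^2$ ($= S^* S$) for some self-adjoint operator $S$

- (4) $T = S^2$ ($= S^* S$) for some positive operator $S$

- (5) $T = S^2$ ($= S^* S$) for a unique positive operator $S$.

The implications $(5) \Rightarrow (4) \Rightarrow (3) \Rightarrow (2.5) \Rightarrow (2) \Rightarrow (1.5) \Rightarrow (1)$ are all either trivial or easy. The remaining implication, $(1) \Rightarrow (5)$, follows from the spectral theorem, using $(1) \Rightarrow (5)$ of the result on nonnegativity of numbers.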
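The spectral-theorem argument for the existence of a positive square root can be traced through on a small example: decompose the operator into eigenspaces, then take the nonnegative square root of each eigenvalue. The following is a sketch under assumptions. The matrix and the helper `combine` are illustrative choices, and the projections onto the eigenspaces are written down by hand rather than computed.

```python
import math

def matmul(a, b):
    """Multiply two square matrices given as nested lists."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

# T = [[2, 1], [1, 2]] is self-adjoint with eigenvalues 3 and 1.
# Orthogonal projections onto the eigenspaces spanned by (1, 1) and (1, -1):
P3 = [[0.5, 0.5], [0.5, 0.5]]
P1 = [[0.5, -0.5], [-0.5, 0.5]]

def combine(c3, c1):
    """Operator acting as the scalar c3 on one eigenspace and c1 on the other."""
    return [[c3 * P3[i][j] + c1 * P1[i][j] for j in range(2)] for i in range(2)]

T = combine(3.0, 1.0)               # the original operator
S = combine(math.sqrt(3.0), 1.0)    # nonnegative square root on each eigenspace

S_squared = matmul(S, S)
is_square_root = all(
    math.isclose(S_squared[i][j], T[i][j], abs_tol=1e-12)
    for i in range(2) for j in range(2)
)
print(T, is_square_root)
```

The operator `S` is self-adjoint with eigenvalues $\sqrt{3}$ and $1$, so it is positive.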

In particular, given $\lambda \in \mathbb{C}$, the operator $\lambda$ is positive iff the number $\lambda$ is nonnegative (assuming that $X$ is nontrivial). And given an operator $T$, if $T$ is positive then each eigenvalue of $T$ is nonnegative (but not conversely).

The modulus of a complex number is like… the modulus of an operator?

What is the modulus of a complex number? Let’s answer this carefully, using the theorem above on nonnegativity of complex numbers. Let $z \in \mathbb{C}$. By the theorem, $z^* z$ is nonnegative, so by the theorem again, there is a unique nonnegative $w$ such that $z^* z = w^2$ ($= w^* w$). This $w$ is, of course, $|z|$, the modulus of $z$.

What is the analogue for operators? Let’s use the theorem above on positivity of operators. Let $T \in \mathrm{End}(X)$. By the theorem, $T^* T$ is positive, so by the theorem again, there is a unique positive $S$ such that $T^* T = S^2$ ($= S^* S$). I’ll call $S$ the modulus of $T$ and write it as $|T|$. I don’t know whether the term “modulus” is standard here, and I’m pretty sure the notation isn’t: it’s risky, given the potential for confusion with a norm. But I’ll use it anyway, to emphasize the analogy.
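A quick worked case, using an illustrative nilpotent matrix on $\mathbb{C}^2$ with its standard inner product:

$$
T = \begin{pmatrix} 0 & 1 \\ 0 & 0 \end{pmatrix},
\qquad
T^* T = \begin{pmatrix} 0 & 0 \\ 1 & 0 \end{pmatrix} \begin{pmatrix} 0 & 1 \\ 0 & 0 \end{pmatrix}
= \begin{pmatrix} 0 & 0 \\ 0 & 1 \end{pmatrix}.
$$

The matrix $T^* T$ is an orthogonal projection, so it is positive and equal to its own square. Hence

$$
|T| = \begin{pmatrix} 0 & 0 \\ 0 & 1 \end{pmatrix}.
$$

Note that $|T| \neq 0$ even though the only eigenvalue of $T$ is $0$: the modulus of an operator is not determined by the moduli of its eigenvalues.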

Complex numbers of unit modulus are like isometries

A complex number $z$ has unit modulus if and only if $z^* z = 1$, if and only if $z z^* = 1$. An operator $T$ is an isometry if and only if $T^* T = 1$, if and only if $T T^* = 1$ (if and only if $T$ preserves inner products, if and only if $T$ preserves distances). Isometries are more often called unitary operators, but I find the term “isometry” more vivid.

Now that we have a definition of “modulus” for operators, we can ask: which operators are literally “of unit modulus”? In other words, which operators $T$ satisfy $|T| = 1$? Here $1$ is the identity operator. Certainly $1$ is positive, so $|T| = 1$ if and only if $T^* T = 1^2$, if and only if $T$ is an isometry. So the different parts of the analogy hang together nicely.

Once again, let’s go back and forth between complex numbers and operators. Given $\lambda \in \mathbb{C}$, the operator $\lambda$ is an isometry iff the number $\lambda$ is of unit modulus (again, assuming that $X$ is nontrivial). Given an operator $T$, if $T$ is an isometry then all its eigenvalues are of unit modulus. Again, the converse is false, and again, we’ll come back to that.
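To see the converse fail, take for instance (an illustrative choice, with respect to the standard inner product on $\mathbb{C}^2$)

$$
T = \begin{pmatrix} 1 & 1 \\ 0 & 1 \end{pmatrix},
\qquad
T^* T = \begin{pmatrix} 1 & 0 \\ 1 & 1 \end{pmatrix} \begin{pmatrix} 1 & 1 \\ 0 & 1 \end{pmatrix}
= \begin{pmatrix} 1 & 1 \\ 1 & 2 \end{pmatrix} \neq 1.
$$

The only eigenvalue of $T$ is $1$, which has unit modulus, but $T$ is not an isometry.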

Polar decomposition of complex numbers and operators

Any complex number $z$ can be expressed as a product

$$z = u p$$

where $u$ is of unit modulus and $p$ is nonnegative. Moreover, this $p$ is uniquely determined as $|z|$, and if $z \neq 0$ then $u$ is uniquely determined by $z$ too. (If $z = 0$ then many choices of $u$ are possible.)

Similarly, it’s a theorem that any operator $T$ can be expressed as a composite

$$T = U P$$

where $U$ is an isometry and $P$ is positive. Moreover, this $P$ is uniquely determined as $|T|$, and if $T$ is invertible then $U$ is uniquely determined by $T$ too. (If $T$ is not invertible then many choices of $U$ are possible.)

In the case where $T$ is just multiplication by a scalar $\lambda$, the second theorem (polar decomposition of operators) reduces to the first (polar decomposition of complex numbers).
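For an invertible operator on $\mathbb{C}^2$, the polar decomposition can be computed by hand. The sketch below is one way to do it, not a general-purpose routine. It assumes the standard inner product and an invertible $2 \times 2$ matrix, and it finds the positive square root of $T^* T$ with a closed formula that follows from the Cayley-Hamilton theorem in dimension two. The function name `polar_2x2` and the test matrix are illustrative.

```python
import math

def matmul(a, b):
    """Multiply two 2x2 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]

def adjoint(a):
    """Conjugate transpose of a 2x2 matrix."""
    return [[complex(a[j][i]).conjugate() for j in range(2)] for i in range(2)]

def polar_2x2(t):
    """Return (u, p) with t = u p, u an isometry and p positive.

    Assumes t is an invertible 2x2 matrix.
    """
    a = matmul(adjoint(t), t)                        # T*T, a positive operator
    det_a = (a[0][0] * a[1][1] - a[0][1] * a[1][0]).real
    tr_a = (a[0][0] + a[1][1]).real
    root_det = math.sqrt(det_a)                      # determinant of p
    tr_p = math.sqrt(tr_a + 2 * root_det)            # trace of p
    # Cayley-Hamilton for p: p^2 - tr(p) p + det(p) = 0, with p^2 = a.
    p = [[(a[i][j] + (root_det if i == j else 0)) / tr_p for j in range(2)]
         for i in range(2)]
    det_p = p[0][0] * p[1][1] - p[0][1] * p[1][0]
    p_inv = [[p[1][1] / det_p, -p[0][1] / det_p],
             [-p[1][0] / det_p, p[0][0] / det_p]]
    return matmul(t, p_inv), p

def close(a, b, tol=1e-12):
    """Entrywise comparison up to a small tolerance."""
    return all(abs(a[i][j] - b[i][j]) < tol for i in range(2) for j in range(2))

T = [[1, 1j], [0, 2]]
IDENTITY = [[1, 0], [0, 1]]
U, P = polar_2x2(T)

is_isometry = close(matmul(adjoint(U), U), IDENTITY)
recomposes = close(matmul(U, P), T)
is_modulus = close(matmul(P, P), matmul(adjoint(T), T))
print(is_isometry, recomposes, is_modulus)
```

For this matrix, $T^* T$ has determinant $4$ and trace $6$, so the formula gives $P = (T^* T + 2)/\sqrt{10}$.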

If you prefer, you can decompose an operator $T$ in the other order too: an isometry followed by a positive operator. To see this, decompose $T^*$ as $U P$; then $T = (U P)^* = P^* U^* = P U^*$. But $U^*$ is an isometry, since the adjoint of an isometry is again an isometry, just as the conjugate of a complex number of unit modulus is again of unit modulus.

And that’s the analogy.

Normal operators, and the fraying of the analogy

Like all analogies, this one eventually frays. Right at the start, we noted a big difference between complex numbers and operators: multiplying complex numbers is commutative, but composing operators isn’t. And another one: there are no nonzero nilpotent complex numbers, but there are nonzero nilpotent operators.

I’ll explain the trouble this causes by talking about operators $T$ that satisfy the equation $T^* T = T T^*$. In a fit of no inspiration, someone once called such operators normal, and the name stuck.

Now, all complex numbers are “normal”, in the sense that $z^* z = z z^*$, but not all operators are normal; for example, any nonzero nilpotent is “abnormal”. So this is a wrinkle in the analogy. You might conclude from this that the correct analogue for the complex numbers is not the set of all operators, but just the normal ones. This idea has in its favour that all self-adjoint operators and isometries (“real numbers” and “numbers of unit modulus”) are normal, because an operator commutes with both itself and its inverse.

However, the normal operators don’t form a ring, at least, not under the usual operations. The class of normal operators is closed under taking polynomials in one variable, but not under composition. Indeed, the polar decomposition theorem implies that by composing two normal operators, we can obtain any operator we like.
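For a concrete failure of closure under composition, here is one illustrative pair on $\mathbb{C}^2$ with its standard inner product, an isometry $U$ and a positive operator $P$, both of which are normal:

$$
U = \begin{pmatrix} 0 & 1 \\ 1 & 0 \end{pmatrix},
\qquad
P = \begin{pmatrix} 1 & 0 \\ 0 & 0 \end{pmatrix},
\qquad
N = U P = \begin{pmatrix} 0 & 0 \\ 1 & 0 \end{pmatrix}.
$$

The composite $N$ is a nonzero nilpotent, and it is not normal:

$$
N^* N = \begin{pmatrix} 1 & 0 \\ 0 & 0 \end{pmatrix}
\neq
\begin{pmatrix} 0 & 0 \\ 0 & 1 \end{pmatrix} = N N^*.
$$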

The normal operators are nevertheless a useful class, giving further depth to the analogy. I clearly remember the first time I saw the definition of normal operator: I was overwhelmed by the feeling that it was an awful hack. “Someone,” I thought to myself, “simply wants a definition that includes both self-adjoint operators and isometries, and they’ve written down the first thing that came into their head.” Oh young, foolish self; I was wrong. Here’s why:

Normal operators are exactly the right context for the spectral theorem.

Recall that for an operator $T$, the spectral theorem says that $X$ is the orthogonal sum of the eigenspaces of $T$. This statement isn’t true for all operators. Earlier on, I stated that it was true for all self-adjoint operators, and that in that case, all the eigenvalues are real. But there are certainly non-self-adjoint operators $T$ such that $X$ is the orthogonal sum of the eigenspaces: multiplication by any non-real scalar is an example.

So which operators is the spectral theorem true for? Exactly the normal ones. In other words:

Spectral theorem. Let $T$ be an operator on $X$. Then $X$ is the orthogonal sum of the eigenspaces of $T$ if and only if $T$ is normal.

This says that multiplication by a scalar is a normal operator, that the class of normal operators is closed under orthogonal sums, and that combining these two constructions generates all possible normal operators. ‘Only if’ is easy; it’s ‘if’ that takes work. You can find a proof in Linear Algebra Done Right.

We can read off two corollaries, both supporting the claim that “complex numbers are like normal operators” is a better analogy than “complex numbers are like operators”.

Corollary. Let $T$ be a normal operator. Then (i) $T$ is self-adjoint if and only if all eigenvalues of $T$ are real, and (ii) $T$ is an isometry if and only if all eigenvalues of $T$ are of unit modulus.

We saw earlier that without the normality, the “only if” parts are true but the “if” parts fail.

Fundamental theorem of algebra for normal operators. Let $p$ be a nonconstant polynomial over $\mathbb{C}$, and let $T$ be a normal operator. Then there exists a normal operator $S$ such that $p(S) = T$.

For both proofs, all we have to do is observe that the class of operators for which the result holds contains all operators of the form “multiply by a scalar” and is closed under orthogonal sums. That’s all there is to it!
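To see the recipe at work on the fundamental theorem of algebra, take the illustrative case $p(s) = s^2$ and a diagonal (hence normal) operator on $\mathbb{C}^2$. On each eigenspace, solve $p(\mu) = \lambda$ for a scalar $\mu$, then take the orthogonal sum:

$$
T = \begin{pmatrix} -1 & 0 \\ 0 & 4 \end{pmatrix},
\qquad
S = \begin{pmatrix} i & 0 \\ 0 & 2 \end{pmatrix},
\qquad
p(S) = S^2 = \begin{pmatrix} i^2 & 0 \\ 0 & 2^2 \end{pmatrix} = T.
$$

Here $S$ is normal but not self-adjoint, even though $T$ is self-adjoint. This matches the scalar situation, where the real number $-1$ has no real square root.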

Re: Linear Operators Done Right

Two quick comments: first, the complex numbers and the algebra of operators on a Hilbert space are both *-algebras and that seems like the main point here. Second, presumably you already know this, but normality is precisely the condition that the *-subalgebra generated by an element is commutative, from which a version of the spectral theorem follows by Gelfand-Naimark.