Entropy, Diversity and Cardinality (Part 2)

Posted by David Corfield

Guest post by Tom Leinster

What happened last time?

Ecologically, I explained some ways to measure the diversity of an ecosystem — but only taking into account abundance (how many of each species there are), not similarity (how much the various species have in common). Mathematically, I explained how to assign a number to a finite probability space, measuring how uniform the distribution is.

What’s happening now?

Ecologically, we’ll see how to measure diversity in a way that takes into account both abundance and similarity. So, for instance, an ecosystem consisting of three species of eel in equal numbers will count as less diverse than one consisting of equal numbers of eels, eagles and earwigs. Mathematically, we’ll see how to assign a number to any finite ‘probability metric space’ (a set equipped with both a probability distribution and a metric).

By thinking about diversity, we’ll be led to the concept of the cardinality of a metric space. (Some ecologists got there years ago.) And by building on progress made at this blog, we’ll solve some open problems posed in the ecology literature.

Previously

In the first post, we modelled an ecosystem as a ‘finite probability space’: that is, a finite sequence $p_1, \ldots, p_n$ of non-negative reals summing to $1$, with $p_i$ representing the abundance of the $i$th species. The post was all about ways of measuring such systems. We found three infinite families of measures, each indexed by a real parameter $\alpha \geq 0$; true to the title, they were the ‘$\alpha$-entropy’, ‘$\alpha$-diversity’ and ‘$\alpha$-cardinality’.

In a sense it doesn’t matter which family you use, since each determines the others. For instance, if you tell me what the $\alpha$-entropy of your ecosystem is, I can tell you what its $\alpha$-diversity and $\alpha$-cardinality are. But sometimes the context makes one more convenient than the others.

I’ll quickly summarize the definitions. There were lots of formulas last time, but in fact everything follows from this one alone: for each $\alpha \geq 0$, the ‘surprise function’ $\sigma_\alpha : [0, 1] \to [0, \infty]$ is defined by
$$\sigma_\alpha(p) = \frac{1}{\alpha - 1}\left(1 - p^{\alpha - 1}\right).$$
Here I’m assuming that $\alpha \neq 1$. I’ll come back to $\alpha = 1$ later.

The $\alpha$-diversity of a finite probability space $A = (p_1, \ldots, p_n)$ is the expected surprise,
$$D_\alpha(A) = \sum_{i=1}^n p_i \, \sigma_\alpha(p_i).$$
It takes its minimum value, $0$, when the distribution is concentrated at one point (that is, some $p_i$ is $1$), and its maximum value, $\sigma_\alpha(1/n)$, when the distribution is uniform ($p_1 = \cdots = p_n = 1/n$).

The $\alpha$-cardinality is defined in terms of the $\alpha$-diversity in such a way that its minimum value is $1$ (‘essentially just one species’) and its maximum is $n$ (‘all species fully represented’). This pretty much forces us to define the $\alpha$-cardinality of $A$ by
$$|A|_\alpha = \frac{1}{\sigma_\alpha^{-1}(D_\alpha(A))}.$$

Finally, the $\alpha$-entropy is just the logarithm of the $\alpha$-cardinality:
$$H_\alpha(A) = \log |A|_\alpha.$$

To handle $\alpha = 1$, take limits: $\sigma_1(p) = \lim_{\alpha \to 1} \sigma_\alpha(p) = -\log p$, $D_1(A) = \lim_{\alpha \to 1} D_\alpha(A)$, etc. With a bit of care, you can handle $\alpha = \infty$ similarly.
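For readers who like to experiment, here is a minimal Python sketch of these Part 1 definitions. The function names (`surprise`, `diversity`, `cardinality`, `entropy`) are my own labels rather than anything from the post, and the closed form used for the cardinality is what you get by unwinding the definition $1/\sigma_\alpha^{-1}(D_\alpha(A))$. Species with probability $0$ are skipped, on the assumption that they contribute nothing to the expected surprise.

```python
import math

def surprise(p, alpha):
    """Surprise sigma_alpha(p) for p > 0; the alpha = 1 case is the limit -log p."""
    if alpha == 1:
        return -math.log(p)
    return (1.0 - p ** (alpha - 1)) / (alpha - 1)

def diversity(ps, alpha):
    """alpha-diversity: expected surprise (absent species contribute nothing)."""
    return sum(p * surprise(p, alpha) for p in ps if p > 0)

def cardinality(ps, alpha):
    """alpha-cardinality, i.e. 1 / sigma_alpha^{-1}(D_alpha), in closed form."""
    if alpha == 1:
        return math.exp(diversity(ps, 1))
    return sum(p ** alpha for p in ps if p > 0) ** (1.0 / (1 - alpha))

def entropy(ps, alpha):
    """alpha-entropy: the logarithm of the alpha-cardinality."""
    return math.log(cardinality(ps, alpha))

uniform = [0.25] * 4
for alpha in (0, 0.5, 1, 2, 3):
    print(alpha, cardinality(uniform, alpha))
```

For the uniform distribution on four species the printed cardinality should come out as $4$ (up to rounding) for every $\alpha$, and a distribution concentrated on one species should give $1$.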

I’ve said all that in as formula-free a way as I can. But from what I’ve said, you can easily derive explicit formulas for diversity, cardinality and entropy, and work out the especially interesting cases $\alpha = 0, 1, 2, \infty$. For ease of reference, here it all is.

Let me draw your attention to two important properties of cardinality:

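- Multiplicativity $|A \times B|_\alpha = |A|_\alpha \cdot |B|_\alpha$ for all $\alpha \geq 0$ and probability spaces $A$ and $B$.

- Invariant max and min For most probability spaces $A$, the cardinality $|A|_\alpha$ changes with $\alpha$. Let’s call $A$ invariant if, on the contrary, $|A|_\alpha$ is independent of $\alpha$.

Fix a cardinality $n \geq 1$ for our underlying set. Then for all $\alpha \geq 0$, the maximum value of $|A|_\alpha$ is $n$, and for all $\alpha > 0$, this is attained at the uniform distribution $(1/n, \ldots, 1/n)$. In particular, the uniform distribution is invariant. Similarly, for all $\alpha \geq 0$, the minimum value of $|A|_\alpha$ is $1$, and for all $\alpha > 0$, this is attained at any $A$ of the form $(0, \ldots, 0, 1, 0, \ldots, 0)$. In particular, $(0, \ldots, 0, 1, 0, \ldots, 0)$ is invariant.

In fact, the invariant probability spaces can be neatly classified:

Theorem Let $n \geq 1$. Let $B$ be a nonempty subset of $\{1, \ldots, n\}$, and define $A = (p_1, \ldots, p_n)$ by
$$p_i = \begin{cases} 1/|B| & \text{if } i \in B \\ 0 & \text{otherwise.} \end{cases}$$
Then $A$ is invariant. Moreover, every invariant probability space arises in this way.

The two cases just mentioned are where $B$ has $n$ or $1$ elements.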

The cardinality of metric spaces: a lightning introduction

We’ll use the concept of the ‘cardinality’ of a finite metric space (which is not the same thing as the cardinality of its set of points!) Here I’ll tell you everything you need to know about it in order to follow this post. If you want more, try these slides; skip the first few if you don’t know any category theory. If you want more still, try the discussion here at the Café.

Here goes. Let $A$ be a finite metric space with points $a_1, \ldots, a_n$; write $d_{ij}$ for the distance from $a_i$ to $a_j$. (We allow $d_{ij} = \infty$.) Let $Z$ be the $n \times n$ matrix with $Z_{ij} = e^{-d_{ij}}$. A weighting on $A$ is a column vector $w = (w_1, \ldots, w_n)^T$ such that
$$Z w = (1, \ldots, 1)^T.$$
The cardinality of the metric space $A$, written $|A|_{MS}$, is the sum $\sum_i w_i$ of the entries of $w$. This is independent of the choice of weighting $w$, so cardinality is defined as long as at least one weighting exists.

Examples If $A$ is empty then $|A|_{MS} = 0$. If $A$ has just one point then $|A|_{MS} = 1$. If $A$ has two points, distance $d$ apart, then
$$|A|_{MS} = \frac{2}{1 + e^{-d}} = 1 + \tanh(d/2).$$
So when $d = 0$ we have $|A|_{MS} = 1$; think of this as saying ‘there is effectively only one point’. As the points move further apart, $|A|_{MS}$ increases, until when $d = \infty$ we have $|A|_{MS} = 2$; think of this as saying ‘there are two completely distinguished points’. More generally, if $A$ is an $n$-point space with $d_{ij} = \infty$ whenever $i \neq j$, then $|A|_{MS} = n$. You can think of cardinality as the ‘effective number of points’.

Usually $Z$ is invertible. In that case there is a unique weighting, and $|A|_{MS}$ is the sum of all $n^2$ entries of $Z^{-1}$. There are, however, examples of finite metric spaces for which no weighting exists; then the cardinality is undefined.
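Here is a small sketch of how one might compute a weighting and the cardinality numerically, assuming the similarity matrix $Z$ is invertible. It uses a hand-rolled Gaussian elimination so that it needs nothing beyond the standard library; the names `solve`, `weighting` and `metric_cardinality` are mine, and the distance matrix `d` is passed as a list of lists (with `math.inf` allowed).

```python
import math

def solve(M, b):
    """Solve M x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    aug = [row[:] + [b[i]] for i, row in enumerate(M)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("Z is (numerically) singular: no unique weighting")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            f = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= f * aug[col][c]
    x = [0.0] * n
    for r in reversed(range(n)):
        s = sum(aug[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (aug[r][n] - s) / aug[r][r]
    return x

def weighting(d):
    """The weighting w with Z w = (1, ..., 1)^T, where Z_ij = exp(-d_ij)."""
    Z = [[math.exp(-dij) for dij in row] for row in d]
    return solve(Z, [1.0] * len(d))

def metric_cardinality(d):
    """Cardinality of the metric space: the sum of the weights."""
    return sum(weighting(d))

two_points = [[0.0, 1.3], [1.3, 0.0]]
print(metric_cardinality(two_points), 1 + math.tanh(1.3 / 2))
```

The two printed numbers should agree, matching the two-point formula above; a space whose distinct points are all at distance `math.inf` should give the number of points.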

Notes for the learned 1. Previously I’ve used $e^{-2 d_{ij}}$ rather than $e^{-d_{ij}}$. The difference amounts to rescaling the metric by a factor of $2$, so is fairly harmless. In the present context the reason for inserting the $2$ seems not to be very relevant, so I’ve dropped it.

2. I’m writing $|A|_{MS}$, rather than the customary $|A|$, to emphasize that it’s the cardinality of $A$ as a metric space. It’s not, in general, the same as the cardinality of the underlying set, or the cardinality (Euler characteristic) of the underlying topological space. (Moral: forgetful functors don’t preserve cardinality.) This will be important later.

3. Lawvere has argued that the symmetry axiom should be dropped from the definition of metric space. I’m assuming throughout this post that our metric spaces are symmetric, but I think everything can be done without the symmetry axiom.

Outline

We now refine our model of an ecosystem by taking similarity between species into account. A (finite) probability metric space is a finite probability space $(p_1, \ldots, p_n)$ together with a metric $d$ on $\{1, \ldots, n\}$. I’ll often denote a probability metric space by $A$.

The distances $d_{ij}$ are to be interpreted as a measure of the dissimilarity between species. There’s a biological decision to be made as to how exactly to quantify it. It could be percentage genetic difference, or difference in toe length, or how far up the evolutionary tree you have to go before you find a common ancestor, and so on. Anyway, I’ll assume that the decision has been made.

Note that in the definition of probability metric space, there’s no axiom saying that the probability and metric structure have to be compatible in any way. This reflects ecological reality: just because your community contains three-toed sloths, there’s no guarantee that you’ll also find two-toed sloths.

Example In 1875, Alfred Russel Wallace described a journey through a tropical forest:

If the traveller notices a particular species and wishes to find more like it, he may often turn his eyes in vain in every direction. Trees of varied forms, dimensions, and colours are around him, but he rarely sees any one of them repeated. Time after time he goes towards a tree which looks like the one he seeks, but a closer examination proves it to be distinct. He may at length, perhaps, meet with a second specimen half a mile off, or may fail altogether, till on another occasion he stumbles on one by accident.

(Tropical Nature and Other Essays, quoted in the paper of Patil and Taillie cited in Part 1.) Wallace’s description tells us three things about the ecosystem: it contains many species, most of them are rare, and there are many close resemblances between them. In our terminology, $n$ is large, most $p_i$s are small, and many $d_{ij}$s are small.

An important player in this drama is the quantity
$$\sum_j e^{-d_{ij}} p_j$$
associated to each point $i$ of a probability metric space. You can interpret it in a couple of ways:

- ‘How much stuff there is near $i$’. Think of the probability metric space as a metric space with a certain amount $p_i$ of ‘stuff’ piled up at each point $i$. (It’s like our ClustrMap.) Then think of $\sum_j e^{-d_{ij}} p_j$ as a sum of $p_j$s weighted by the $e^{-d_{ij}}$s. The point $i$ itself contributes the full measure $p_i$ of its stuff; a nearby point $j$ contributes most of its measure $p_j$; a far-away point contributes almost nothing.

- ‘Mean closeness to $i$’. While $d_{ij}$ measures distance, $e^{-d_{ij}}$ measures closeness, on a scale of $0$ to $1$: for points close together it’s nearly $1$, and for points far apart it’s close to $0$. So $\sum_j e^{-d_{ij}} p_j$ is the mean closeness to point $i$.

When we’re thinking ecologically, we might say similarity instead of closeness, so that identical species have similarity $1$ and utterly different species have similarity $0$. Then $\sum_j e^{-d_{ij}} p_j$ is the expected similarity between an individual of species $i$ and an individual chosen at random. So even if species $i$ itself is rare, a great abundance of species similar to $i$ will ensure that $\sum_j e^{-d_{ij}} p_j$ is high.

I’ll call $\sum_j e^{-d_{ij}} p_j$ the density at $i$. The second interpretation makes it clear that density is always in $[0, 1]$.

The metric aspect of a probability metric space is encapsulated in the matrix $Z = (e^{-d_{ij}})$ mentioned earlier. (You could call $Z$ the similarity matrix.) The probabilistic aspect is encapsulated in the $n$-dimensional column vector $p = (p_1, \ldots, p_n)^T$. Their product $Zp$ encapsulates density: it is the column vector whose $i$th entry $(Zp)_i$ is the density at $i$.

In what sense are we generalizing Part 1? Well, we’ve replaced sets by metric spaces, so we need to know how metric spaces generalize sets. This works as follows: given a set $S$, the discrete metric space on $S$ is the metric space in which the points are the points of $S$ and the distance between any two distinct points is $\infty$. This construction is left adjoint to the forgetful functor from the category of metric spaces and distance-decreasing maps to the category of sets.

If $A$ is discrete then the similarity matrix $Z$ is the identity, so $Zp = p$, i.e. $(Zp)_i = p_i$ for all $i$. Ecologically, discreteness means that distinct species are regarded as completely different: we’re incapable of seeing the resemblance between butterflies and moths.
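To make the density concrete, here is a tiny sketch that computes the vector $Zp$ from a probability vector and a distance matrix. The function name `density` and the sample numbers are illustrative assumptions of mine, not data from any real ecosystem.

```python
import math

def density(p, d):
    """Return the vector Zp, where (Zp)_i = sum_j exp(-d[i][j]) * p[j]."""
    return [sum(math.exp(-dij) * pj for dij, pj in zip(row, p)) for row in d]

inf = math.inf
p = [0.3, 0.7]

# Discrete space: distinct species are infinitely far apart, so Zp = p.
print(density(p, [[0, inf], [inf, 0]]))

# Two species at distance 0 are indistinguishable: every density is 1.
print(density(p, [[0, 0], [0, 0]]))

# Somewhere in between: each density lies between p_i and 1.
print(density(p, [[0, 1.0], [1.0, 0]]))
```

The first two cases are the extremes: in the discrete case the density is just the probability, and when all species coincide the density is $1$ everywhere.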

The plan We’re going to define, for each $\alpha \geq 0$, the $\alpha$-diversity, $\alpha$-cardinality and $\alpha$-entropy of any probability metric space. We’ll do this by taking the definitions for probability spaces without metrics (Part 1) and judiciously replacing certain occurrences of ‘$p_i$’ by ‘$(Zp)_i$’.

Then things get interesting. For a fixed set, it was easy to find the probability distribution that maximized the cardinality (or equivalently, the diversity or entropy). For a fixed metric space, it’s not so obvious… but the answer has something to do with the cardinality of the metric space.

We’ll then see how to apply what we know about cardinality of metric spaces to some problems in the ecological literature, and finally, we’ll see how the cardinality of metric spaces was first discovered in ecology.

Surprise, revisited

Imagine you’re taking samples from an ecosystem. So far you’ve found one species of toad, two species of frog, and three species of newt. How surprised would you be if the next individual you found was a frog of a different species? Not very surprised, because although it would be the first time you’d seen that particular species in your ecosystem, you’d already seen lots of similar species. You’d have been much more surprised if, for instance, you’d found a penguin.

Imagine you’re studying the vocabulary used by a particular novelist. You’re tediously going through his work, listing all the words he’s used. So far your data indicates that he writes rather formally, favouring long, Latinate words and avoiding slang. How surprised would you be if the next word you found was ‘erubescent’? Not very surprised, even if it was the first time you’d ever come across the word, because you’d already seen lots of similar words in his books. You’d have been much more surprised if the next word had been ‘bummer’.

Both scenarios show that your surprise at an event depends not only on the (perceived) probability of the event itself, but also on the probability of similar events. We’ll interpret this mathematically as follows: instead of our surprise at finding species $i$ being a function of the probability, $p_i$, it will be a function of the density $(Zp)_i$.

Recall from Part 1 that a surprise function is a decreasing function $\sigma : [0, 1] \to [0, \infty]$ with $\sigma(1) = 0$. Any surprise function $\sigma$ gives rise to a diversity measure on finite probability metric spaces: the diversity of $A$ is the expected surprise,
$$\sum_i p_i \, \sigma((Zp)_i).$$
This is just the same as the formula in Part 1 except that $\sigma(p_i)$ has been changed to $\sigma((Zp)_i)$. In the degenerate case of a discrete metric space, where we’re blind to the similarities between species, it’s no change at all.

With almost no further thought, we can now extend the concepts of $\alpha$-diversity, $\alpha$-cardinality and $\alpha$-entropy from probability spaces to probability metric spaces.

Diversity

Let $\alpha \geq 0$. The $\alpha$-diversity of a probability metric space $A$ is
$$D_\alpha(A) = \sum_i p_i \, \sigma_\alpha((Zp)_i) = \begin{cases} \dfrac{1}{\alpha - 1}\left(1 - \sum_i p_i (Zp)_i^{\alpha - 1}\right) & \text{if } \alpha \neq 1 \\ -\sum_i p_i \log (Zp)_i & \text{if } \alpha = 1. \end{cases}$$
In the case of a discrete metric space, this reduces to what we called $\alpha$-diversity in Part 1.

I’ll run through the usual examples, $\alpha = 0, 1, 2$. It seems that the easiest to understand is $\alpha = 2$, so I’ll start with that.

Example: $\alpha = 2$ We have
$$D_2(A) = 1 - \sum_{i,j} p_i e^{-d_{ij}} p_j = p^T \Delta p$$
where $p^T$ is the transpose of the column vector $p$ and $\Delta$ is the $n \times n$ matrix given by $\Delta_{ij} = 1 - e^{-d_{ij}}$. How can we understand this? Well, $e^{-d_{ij}}$ is what I called the similarity between species $i$ and $j$, so $\Delta_{ij} = 1 - e^{-d_{ij}}$ might be called the difference. It’s an increasing function of $d_{ij}$, measured on a scale of $0$ to $1$. Choose two individuals at random: then $D_2(A)$ is the expected difference between them.

This quantity is known as Rao’s quadratic diversity index, or Rao’s quadratic entropy, named after the eminent statistician C.R. Rao. In its favour are the straightforward probabilistic interpretation and the mathematically useful fact that it’s a quadratic form.

In the degenerate case of a discrete metric space, $D_2(A)$ is Simpson’s diversity index, described in Part 1.

Example: $\alpha = 1$ We have
$$D_1(A) = -\sum_i p_i \log (Zp)_i.$$
In the discrete case, $D_1(A)$ is Shannon entropy. In the general case, it would be the cross entropy of $p$ and $Zp$, except that cross entropy is usually only defined when both arguments are probability distributions, and this isn’t the case for our second argument, $Zp$.

Example: $\alpha = 0$ Even $0$-diversity is nontrivial:
$$D_0(A) = \sum_i \frac{p_i}{(Zp)_i} - 1.$$
In the discrete case, $D_0(A)$ is $n - 1$, the ‘species richness’. In general, the $0$-diversity is highest when, for many $i$, the density at $i$ is not much greater than the probability at $i$; that is, there are not many individuals of species similar to the $i$th. This fits sensibly with our intuitive notion of diversity.
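The three examples can be checked numerically. The sketch below is my own illustration (the names `diversity` and `rao_quadratic`, and the three-species distances, are assumptions): it computes $D_\alpha$ as the expected surprise of the density $Zp$, and separately computes the quadratic form $p^T \Delta p$ so the two can be compared at $\alpha = 2$.

```python
import math

def density(p, d):
    """(Zp)_i = sum_j exp(-d[i][j]) * p[j]."""
    return [sum(math.exp(-dij) * pj for dij, pj in zip(row, p)) for row in d]

def diversity(p, d, alpha):
    """alpha-diversity of a probability metric space (species with p_i = 0 skipped)."""
    zp = density(p, d)
    if alpha == 1:
        return -sum(pi * math.log(zi) for pi, zi in zip(p, zp) if pi > 0)
    total = sum(pi * zi ** (alpha - 1) for pi, zi in zip(p, zp) if pi > 0)
    return (1.0 - total) / (alpha - 1)

def rao_quadratic(p, d):
    """Expected difference p^T Delta p, with Delta_ij = 1 - exp(-d_ij)."""
    n = len(p)
    return sum(p[i] * (1 - math.exp(-d[i][j])) * p[j]
               for i in range(n) for j in range(n))

# Hypothetical three-species community.
p = [0.5, 0.3, 0.2]
d = [[0, 1, 2], [1, 0, 1.5], [2, 1.5, 0]]
print(diversity(p, d, 2), rao_quadratic(p, d))   # should agree
print(diversity(p, d, 1), diversity(p, d, 0))
```

With a discrete metric (all off-diagonal distances `math.inf`) the same function should return $n - 1$ at $\alpha = 0$ and the Shannon entropy at $\alpha = 1$.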

The family of diversity measures $(D_\alpha)_{\alpha \geq 0}$ was introduced here:

Carlo Ricotta, Laszlo Szeidl, Towards a unifying approach to diversity measures: Bridging the gap between the Shannon entropy and Rao’s quadratic index, Theoretical Population Biology 70 (2006), 237–243

…almost. Actually, there’s a subtle difference.

Aside: what Ricotta and Szeidl actually do Near the beginning of their paper, Ricotta and Szeidl make a convention: that in their metric spaces of species, all distances will be $\leq 1$. This may seem innocuous, if mathematically unnatural, but turns out to be rather significant.

Motivated by ideas about conflict between species, they define, for each $\alpha \geq 0$, a diversity measure for probability metric spaces. At first glance the formula seems different from ours. However, it becomes the same if we rescale the range of allowed distances from $[0, \infty]$ to $[0, 1]$ via the order-preserving bijection
$$d \mapsto 1 - e^{-d}.$$
We’ve already seen the formula $1 - e^{-d}$: earlier, we wrote $\Delta_{ij} = 1 - e^{-d_{ij}}$ and called it the difference between species $i$ and $j$. In other words, the ‘distance’ in Ricotta and Szeidl’s metric space is what I’d call the ‘difference’. It’s easy to show that if the distances $d_{ij}$ define a metric on $\{1, \ldots, n\}$ then so do the differences $\Delta_{ij}$ (but not conversely). The presence of two metrics on the same set can be slightly confusing.

Cardinality and entropy

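In Part 1 we applied an invertible transformation to rescale $\alpha$-diversity into something more mathematically convenient, $\alpha$-cardinality. We’ll now apply the same rescaling to obtain a definition of $\alpha$-cardinality in our more sophisticated, metric context. And, as before, we’ll call its logarithm ‘$\alpha$-entropy’.

So, let $\alpha \geq 0$ and let $A$ be a probability metric space. Its $\alpha$-cardinality is
$$|A|_\alpha = \frac{1}{\sigma_\alpha^{-1}(D_\alpha(A))} = \begin{cases} \left(\sum_i p_i (Zp)_i^{\alpha - 1}\right)^{1/(1 - \alpha)} & \text{if } \alpha \neq 1 \\ \prod_i (Zp)_i^{-p_i} & \text{if } \alpha = 1. \end{cases}$$
Its $\alpha$-entropy is
$$H_\alpha(A) = \log |A|_\alpha.$$
The $\alpha$-cardinality is always a real number $\geq 1$; the $\alpha$-diversity and $\alpha$-entropy are always real numbers $\geq 0$.

Example: $\alpha = 0$ The $0$-cardinality of a probability metric space is $\sum_i p_i / (Zp)_i$.

Example: $\alpha = 1$ As in the discrete case, the $1$-cardinality has a special property not shared by the other $\alpha$-cardinalities: that the cardinality of a convex combination $\lambda A + (1 - \lambda) B$ of probability metric spaces is determined by $\lambda$ and the cardinalities of $A$ and $B$. I’ll leave the details to you, curious reader.

Example: $\alpha = 2$ This is the reciprocal of a quadratic form:
$$|A|_2 = \frac{1}{p^T Z p}.$$

Example: $\alpha = \infty$ The limit $\lim_{\alpha \to \infty} |A|_\alpha$ exists for every probability metric space $A$; its value, the $\infty$-cardinality of $A$, is
$$|A|_\infty = \frac{1}{\max_{i \,:\, p_i > 0} (Zp)_i}.$$

All the $\alpha$-cardinalities are multiplicative, in the following sense. Any two probability metric spaces $A$, $B$ have a ‘product’ $A \otimes B$. The underlying set is $A \times B$. The probability of event $(a, b)$ is the product of the probabilities of $a$ and $b$. The metric is the ‘$\ell_1$ metric’: if $a, a' \in A$ and $b, b' \in B$ then the distance from $(a, b)$ to $(a', b')$ is $d(a, a') + d(b, b')$. (Viewing metric spaces as enriched categories, this is the usual tensor product.) The multiplicativity result is that for all $\alpha \geq 0$, $A$ and $B$,
$$|A \otimes B|_\alpha = |A|_\alpha \cdot |B|_\alpha.$$
Equivalently, $H_\alpha$ is log-like: $H_\alpha(A \otimes B) = H_\alpha(A) + H_\alpha(B)$.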
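Multiplicativity is easy to test numerically. The following sketch is illustrative only: the helper names (`cardinality`, `product`) and the two small example spaces are assumptions of mine. It builds the product space with summed distances and multiplied probabilities, then compares the two sides for several values of $\alpha$, including the limiting case `math.inf`.

```python
import math

def density(p, d):
    """(Zp)_i = sum_j exp(-d[i][j]) * p[j]."""
    return [sum(math.exp(-dij) * pj for dij, pj in zip(row, p)) for row in d]

def cardinality(p, d, alpha):
    """alpha-cardinality of the probability metric space (p, d)."""
    pairs = [(pi, zi) for pi, zi in zip(p, density(p, d)) if pi > 0]
    if alpha == 1:
        return math.exp(-sum(pi * math.log(zi) for pi, zi in pairs))
    if alpha == math.inf:
        return 1.0 / max(zi for _, zi in pairs)
    return sum(pi * zi ** (alpha - 1) for pi, zi in pairs) ** (1.0 / (1 - alpha))

def product(p, d, q, e):
    """Product space: probabilities multiply, distances add."""
    idx = [(i, j) for i in range(len(p)) for j in range(len(q))]
    probs = [p[i] * q[j] for i, j in idx]
    dist = [[d[i][k] + e[j][l] for k, l in idx] for i, j in idx]
    return probs, dist

# Two hypothetical probability metric spaces.
p, d = [0.5, 0.3, 0.2], [[0, 1, 2], [1, 0, 1.5], [2, 1.5, 0]]
q, e = [0.6, 0.4], [[0, 0.7], [0.7, 0]]
pq, de = product(p, d, q, e)
for alpha in (0, 0.5, 1, 2, math.inf):
    print(alpha, cardinality(pq, de, alpha),
          cardinality(p, d, alpha) * cardinality(q, e, alpha))
```

On each line the last two numbers should agree up to rounding. The reason is that the similarity matrix of the product is the Kronecker product of the two similarity matrices, so the density at $(a, b)$ is the product of the densities at $a$ and $b$.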

Maximizing diversity

When a species becomes extinct, biodiversity is reduced. Less drastically, diversity decreases when a species becomes rare. Conversely, a highly diverse ecosystem is one containing a wide variety of species, all well-represented, with no one species dominant.

Now suppose you’re in charge of a small ecosystem. The set of species is fixed, but you can control the abundance of each species. What should you do to maximize diversity?

Before we seek a mathematical answer, let’s try to get a feel for it. If there are only two species, the answer is immediate: you want them evenly balanced, 50–50. What if there are three? In the absence of further information, you’d want 33⅓% of each. But let’s suppose that two of them are rather similar (say, Persian wheat and Polish wheat) and one is relatively different (say, poppies). Then the 33–33–33 split would mean that your system was dominated two-to-one by wheat, and that doesn’t seem like maximal diversity. On the other hand, if you regarded the two types of wheat as essentially the same, you’d want to split it 25–25–50. And if you acknowledged that the two wheats were different, but not very different, you might choose a compromise such as 30–30–40.

Here’s the mathematical question. Fix a finite metric space $A$.

Question Let $\alpha \geq 0$. What probability distribution on $A$ maximizes the $\alpha$-cardinality, and what is the maximum value?

It doesn’t matter whether the question is posed in terms of $\alpha$-entropy, $\alpha$-diversity or $\alpha$-cardinality, since the three are related by invertible, order-preserving transformations. (For more on maximizing entropy, see David’s post.) And, incidentally, the analogous question for minimum values is easy: the minimum value of the $\alpha$-cardinality is $1$, attained when some $p_i$ is $1$.

Rough Answer Often, the $\alpha$-cardinality is maximized by taking the probability distribution $w/|A|_{MS}$, for any weighting $w$ on $A$, and the maximum value is the cardinality $|A|_{MS}$ of the metric space $A$.

Thus — finally, as promised! — we see how the concept of the cardinality of a metric space comes naturally from ecological considerations.

Let me say immediately what’s rough about the Rough Answer.

- It’s certainly not true in complete generality — in fact, it doesn’t even make sense in complete generality, since not every finite metric space has a well-defined cardinality.

- It’s not always true even when $A$ does have well-defined cardinality, since there are some metric spaces $A$ for which $|A|_{MS} < 1$, and $1$ is the minimum value of the $\alpha$-cardinality.

- Furthermore, there are metric spaces $A$ for which there is a unique weighting $w$, but $w_i < 0$ for some values of $i$. Then the ‘probability distribution’ in the Rough Answer is not a probability distribution at all.

- And even when $|A|_{MS}$ is defined and $\geq 1$, even when $A$ has a unique weighting $w$ with $w_i \geq 0$ for all $i$, I don’t know that the Rough Answer is necessarily correct.

So, in what sense is the Rough Answer an answer?

I’ll give a series of partial justifications. Immediately, the Rough Answer is correct when $A$ is discrete: then $|A|_{MS}$ is $n$ (the cardinality of the underlying set), the unique weighting is $(1, \ldots, 1)^T$, and the probability distribution mentioned is the uniform distribution.

Notation Since we’ve fixed a metric space $A$ and we’re interested in a varying probability distribution $p$ on $A$, from now on I’ll write the $\alpha$-cardinality of the probability metric space defined by $p$ and $A$ as $|p|_\alpha$.

Fact 1 Let $w$ be a weighting on $A$ and write $p = w/|A|_{MS}$. Then $|p|_\alpha = |A|_{MS}$, for all $\alpha \geq 0$.

(In order for $p$ to be a probability distribution, we need all the weights $w_i$ to be non-negative; but even if they’re not, we can still define $|p|_\alpha$ and the result still holds.)

This is a simple calculation. In particular, the probability distribution $w/|A|_{MS}$ is invariant: its $\alpha$-cardinality is the same for all $\alpha$. This is a very special property:

Fact 2 Let $B$ be a nonempty subset of $A$ and $w$ a weighting on $B$ such that $w_i \geq 0$ for all $i \in B$; define a probability distribution $p$ on $A$ by
$$p_i = \begin{cases} w_i / |B|_{MS} & \text{if } i \in B \\ 0 & \text{otherwise.} \end{cases}$$
Then $p$ is invariant. Moreover, every invariant probability distribution on $A$ arises in this way.

Given the special case of discrete metric spaces (the Theorem at the end of ‘Previously’), it seems reasonable to view the distribution $w/|A|_{MS}$ on a metric space $A$ as the analogue of the uniform distribution on a set.
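For the record, here is one way to write out the calculation behind Fact 1. It is my own spelling-out rather than a quotation, and it assumes $\alpha \neq 1$ and $|A|_{MS} > 0$; the case $\alpha = 1$ works the same way with the product formula. Here $Z$ is the similarity matrix, $w$ is a weighting (so $Zw$ is the all-ones vector) and $p = w/|A|_{MS}$.

```latex
\begin{align*}
Zp &= \frac{Zw}{|A|_{MS}} = \frac{1}{|A|_{MS}}\,(1, \ldots, 1)^T,
  \qquad\text{so}\qquad (Zp)_i = \frac{1}{|A|_{MS}} \text{ for every } i, \\
\sum_i p_i &= \frac{\sum_i w_i}{|A|_{MS}} = 1, \\
|p|_\alpha
  &= \Bigl(\sum_i p_i\,(Zp)_i^{\alpha - 1}\Bigr)^{1/(1-\alpha)}
   = \Bigl(|A|_{MS}^{\,1-\alpha} \sum_i p_i\Bigr)^{1/(1-\alpha)}
   = |A|_{MS}.
\end{align*}
```

The key point is that the weight distribution has constant density $1/|A|_{MS}$, just as the uniform distribution on an $n$-element set has constant probability $1/n$.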

Fact 3 Let $w$ be a weighting on $A$. Then for each $\alpha \geq 0$, the function $p \mapsto |p|_\alpha$ has a stationary point (critical point) at $p = w/|A|_{MS}$.

Again, this holds for all $\alpha \geq 0$. I do not know under precisely what circumstances this stationary point is a local maximum, or a global maximum, or whether every stationary point is of this form. The best result I know is this:

Fact 4 Suppose that the similarity matrix $Z$ of $A$ is positive-definite, and that $\alpha \geq 2$. Let $w$ be the unique weighting on $A$, and suppose that all the weights $w_i$ are non-negative. Then the Rough Answer is correct: the maximum value of $|p|_\alpha$ is $|A|_{MS}$, attained when $p = w/|A|_{MS}$.

For example, suppose that $A$ has only three points, as in the wheat and poppies example. Then $Z$ is always positive-definite and the weights are always non-negative, so the $\alpha$-diversity is maximized by growing the three species in proportion to their weights, no matter what value of $\alpha \geq 2$ we choose. Suppose, for instance, that the two species of wheat are distance apart, and both are distance from the poppy species. Then a few calculations with a $3 \times 3$ matrix tell us that diversity is maximized by growing % Persian wheat, % Polish wheat and % poppies. This fits with our earlier intuitions.
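To see how such a calculation goes, here is a sketch with distances that I have made up purely for illustration: the two wheats at distance $1$ from each other and at distance $10$ from the poppies. These numbers are an assumption, not data. The code solves $Zw = (1, 1, 1)^T$, normalizes the weights into a probability distribution, and checks that the $\alpha$-cardinality of that distribution does not depend on $\alpha$.

```python
import math

def solve(M, b):
    """Solve M x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    aug = [row[:] + [b[i]] for i, row in enumerate(M)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            f = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= f * aug[col][c]
    x = [0.0] * n
    for r in reversed(range(n)):
        s = sum(aug[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (aug[r][n] - s) / aug[r][r]
    return x

def weight_distribution(d):
    """Return (w / |A|_MS, |A|_MS) for the metric space with distance matrix d."""
    Z = [[math.exp(-dij) for dij in row] for row in d]
    w = solve(Z, [1.0] * len(d))
    total = sum(w)
    return [wi / total for wi in w], total

def cardinality(p, d, alpha):
    """alpha-cardinality of the probability metric space (p, d)."""
    zp = [sum(math.exp(-dij) * pj for dij, pj in zip(row, p)) for row in d]
    if alpha == 1:
        return math.exp(-sum(pi * math.log(zi) for pi, zi in zip(p, zp) if pi > 0))
    return sum(pi * zi ** (alpha - 1)
               for pi, zi in zip(p, zp) if pi > 0) ** (1.0 / (1 - alpha))

# Hypothetical distances: Persian wheat, Polish wheat, poppies.
d = [[0, 1, 10], [1, 0, 10], [10, 10, 0]]
p, card = weight_distribution(d)
print([round(100 * pi, 1) for pi in p], card)
print([cardinality(p, d, alpha) for alpha in (0, 1, 2, 5)])
```

Under these assumed distances the weight distribution comes out at roughly 29.7% of each wheat and 40.6% poppies, and every entry of the second printed list should match the cardinality of the metric space.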

Further partial results along these lines are in

S. Pavoine, S. Ollier, D. Pontier, Measuring diversity from dissimilarities with Rao’s quadratic entropy: Are any dissimilarities suitable? Theoretical Population Biology 67 (2005), 231–239.

(Again, there doesn’t seem to be a free copy online. Apparently it’s just not the done thing in mathematical ecology. Ecologists of the world, rise up! Throw off your chains!)

They also do some case studies with real data, calculating, for instance, how to maximize the 2-diversity of a system of 18 breeds of cattle. They use the concept of the cardinality of a metric space (not by that name). They weren’t the first ecologists to do so… but I’ll save that story until the end.

Does mixing increase diversity?

We tend to think of high-entropy situations as those in which a kind of blandness has been attained through everything being mixed together, like white noise, or snow on a TV screen, or all the pots of paint combined to make brown. In other words, we expect mixing to increase entropy. We probably expect it to increase diversity too.

This principle is often formalized as ‘concavity’. Suppose you own two neighbouring fields, each containing a hundred farmyard animals. You open a gate between the fields, effectively making one big field containing two hundred animals. If one of the original fields contained just sheep and the other contained just cows, you would have combined two ecosystems of diversity $0$ to make one ecosystem of diversity $> 0$. More generally, concavity says that the diversity of the combined ecosystem is at least as great as the mean of the two original diversities.

To be precise, fix a finite metric space $A$ and let $D$ be a function assigning to each probability distribution $p$ on $A$ a ‘diversity’ $D(p) \in \mathbb{R}$. Then $D$ is concave if for all probability distributions $p$ and $q$ on $A$ and all $\lambda \in [0, 1]$,
$$D(\lambda p + (1 - \lambda) q) \geq \lambda D(p) + (1 - \lambda) D(q).$$
This is seen as a desirable property of diversity measures.
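The sheep-and-cows story can be turned into a two-line check. In the sketch below (names and set-up are my own, for illustration), `concavity_gap` measures how far the diversity of a mixture exceeds the corresponding mean of the diversities; for a concave measure it is never negative. I use Simpson’s index, the $2$-diversity of a discrete space, as the diversity measure.

```python
def simpson_diversity(p):
    """2-diversity of a discrete space: 1 - sum_i p_i**2."""
    return 1.0 - sum(pi * pi for pi in p)

def mix(p, q, lam):
    """The mixed distribution lam*p + (1 - lam)*q."""
    return [lam * pi + (1 - lam) * qi for pi, qi in zip(p, q)]

def concavity_gap(D, p, q, lam):
    """D(lam*p + (1-lam)*q) - (lam*D(p) + (1-lam)*D(q)); >= 0 if D is concave."""
    return D(mix(p, q, lam)) - (lam * D(p) + (1 - lam) * D(q))

sheep_only = [1.0, 0.0]
cows_only = [0.0, 1.0]
print(concavity_gap(simpson_diversity, sheep_only, cows_only, 0.5))
```

Each field on its own has diversity $0$ and the merged field has diversity $1/2$, so the gap here is $1/2$. A negative gap for some $p$, $q$ and $\lambda$ would witness a failure of concavity.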

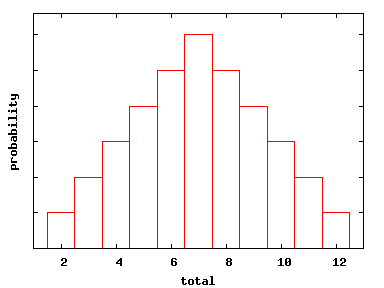

Example If the metric space $A$ is discrete then the $\alpha$-diversity measure $D_\alpha$ is concave for every $\alpha \geq 0$. (This is in the paper by Patil and Taillie cited in Part 1.)

Question For a general metric space $A$, is $\alpha$-diversity always concave?

Ricotta and Szeidl, who introduced these diversity measures, were especially interested in the $2$-diversity (Rao’s quadratic index $D_2$, discussed above). They prove that it’s concave when there are at most $3$ species, and state that when $n \geq 4$, ‘the problem has not been solved’. We can now solve it, at least for $n \geq 6$, by adapting a related counterexample of Tao.

Example Let $A$ be the $6$-point metric space whose difference matrix $\Delta$ is the symmetric matrix where and . (Recall that $\Delta_{ij} = 1 - e^{-d_{ij}}$.) Let Then So for this space $A$, Rao’s diversity index $D_2$ is not concave. And we can get a similar example with any given number $n \geq 6$ of points, by adjoining new points at distance $\infty$ from everything else.

Ricotta and Szeidl also proved some partial results on the concavity of the diversity measures $D_\alpha$ for values of $\alpha$ other than $2$. But again, $D_\alpha$ is not always concave:

Fact 5 For all $\alpha \geq 0$, there exists a 6-point metric space whose $\alpha$-diversity measure is not concave.

This can be proved by a construction similar to the one for $\alpha = 2$, but taking different values of and according to the value of $\alpha$.

How biologists discovered the cardinality of metric spaces

When Christina Cobbold suggested the possibility of a link between the cardinality of metric spaces and diversity measures, I read the paper she gave me, followed up some references, and eventually found — to my astonishment and delight — that the cardinality of a metric space appeared explicitly in the ecology literature:

Andrew Solow, Stephen Polasky, Measuring biological diversity, Environmental and Ecological Statistics 1 (1994), 95–107.

I don’t know how Solow and Polasky would describe themselves, but probably not as category theorists: Solow is director of the Marine Policy Center at Woods Hole Oceanographic Institution, and Polasky is a professor of ecological and environmental economics.

They came to the definition of the cardinality of a metric space by a route completely different from anything discussed so far. They don’t mention entropy. In fact, they almost completely ignore the matter of the abundance of the species present, considering only their similarity. Here’s an outline of the relevant part of their paper.

Preservation of biodiversity is important, but money for it is scarce. So perhaps it’s sensible, or at least realistic, to focus our conservation efforts on those ecosystems most likely to benefit human beings. As they put it,

The discussion so far has essentially assumed that diversity is desirable and has focused on constructing a measure with reasonable properties. A different view is that it is not so much diversity per se that is valuable, but the benefits that diversity provides.

So they embark on a statistical analysis of the likely benefits of high biodiversity, using, for the sake of concreteness, the idea that every species has a certain probability of providing a cure for a disease. Their species form a finite metric space, and similar species are assumed to have similar probabilities of providing a cure.

With this model set up, they compute a lower bound for the probability that a cure exists somewhere in the ecosystem. The bound is an increasing function of a quantity that they pithily describe as the ‘effective number of species’… and that’s exactly the cardinality of the metric space!

Despite the fact that the cardinality appears only as a term in a lower bound, they realize that it’s an interesting quantity and discuss it for a page or so, making some of the same conjectures that I made again fifteen years later: that cardinality is ‘monotone in distance’ (if you increase the distances in a metric space, you increase the cardinality) and that, for a space with $n$ points, the cardinality always lies between $1$ and $n$. Thanks to the help of Simon Willerton and Terence Tao, we now know that both conjectures are false.

Summary

In Part 1 we discussed how to measure a probability space. In Part 2 we discussed how to measure a probability metric space. In both we defined, for each $\alpha \geq 0$, the $\alpha$-diversity of a space (the ‘expected surprise’), the $\alpha$-cardinality (the ‘effective number of points’), and the $\alpha$-entropy (its logarithm).

In Part 1, the uniform distribution played a special role. Given a finite set $A$, the uniform distribution is essentially the only distribution on $A$ with the same $\alpha$-cardinality for all $\alpha$. (‘Essentially’ refers to the fact that you can also take the uniform distribution on a subset of $A$, then extend by $0$.) Moreover, for every $\alpha$, it is the distribution with the maximum $\alpha$-cardinality, namely, the cardinality of the set $A$.

In Part 2, where the set $A$ is equipped with a metric, there is in general no distribution with all these properties. However, there’s something that comes close. When $A$ has a non-negative weighting, we have the ‘weight distribution’ $w/|A|_{MS}$ on $A$, in which each point of $A$ has probability proportional to its weight. This is essentially the only distribution on $A$ with the same $\alpha$-cardinality for all $\alpha$. Moreover, under certain further hypotheses, it is also the distribution with the maximum $\alpha$-cardinality, namely, the cardinality of the metric space $A$.

I don’t see the full picture clearly. I don’t know the solution to the general problem of finding the distribution with the maximum cardinality (or entropy, or diversity) on a given metric space. And I don’t know how to incorporate into my intuition the fact that there are some finite metric spaces with negative cardinality, and others with cardinality greater than the number of points.

I also don’t know how one would generalize from distributions on finite metric spaces to distributions on, say, compact or complete metric spaces — but that’s something for another day.

Re: Entropy, Diversity and Cardinality (Part 2)

Wow!

(Apologies for a content-free comment, but you know it’s going to take a while for the rest of us to digest all that…)