Relative Entropy

Posted by John Baez

You may recall how Tom Leinster, Tobias Fritz and I cooked up a neat category-theoretic characterization of entropy in a long conversation here on this blog. Now Tobias and I have a sequel giving a category-theoretic characterization of relative entropy. But since some people might be put off by the phrase ‘category-theoretic characterization’, it’s called:

I’ve written about this paper before, on my other blog:

- Relative Entropy (Part 1): how various structures important in probability theory arise naturally when you do linear algebra using only the nonnegative real numbers.

- Relative Entropy (Part 2): a category related to statistical inference, and how relative entropy defines a functor on this category.

- Relative Entropy (Part 3): statement of our main theorem, which characterizes relative entropy up to a constant multiple as the only functor with a few nice properties.

But now the paper is actually done! Let me give a compressed version of the whole story here… with sophisticated digressions buried in some parenthetical remarks that you’re free to skip if you want.

Our gives a new characterization of the concept of relative entropy, also known as ‘relative information’, ‘information gain’ or—by people who like to use jargon to make their work seem obscure—‘Kullback-Leibler divergence’.

Here’s the basic idea. Whenever you have two probability distributions and on the same finite set you can define the entropy of relative to :

Here we set

equal to when unless is also zero, in which case we set it equal to 0. Relative entropy thus takes values in

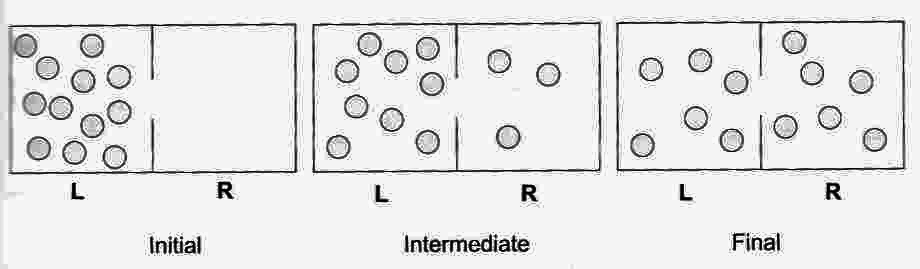

Intuitively speaking, measures how surprised you’d be if you thought a situation was described by a probability distribution … but then someone came along and said no, it’s really .

Or if ‘surprise’ sounds too subjective, it’s the expected amount of information gained when you discover the probability distribution is really when you’d thought it was

Tobias and I wanted to use category theory to say what’s so great about relative entropy. We did it using a category where:

- an object consists of a finite set and a probability distribution on that set;

- a morphism consists of a measure-preserving function from to together with a probability distribution on for each element , with the property that unless .

If the raw math seems hard to swallow, perhaps some honey-coated words will help it go down. I think of an object of as a system with some finite set of states together with a probability distribution on its states. This lets me think of a morphism

in a nice way. First, there’s a measurement process , a function from the set of states of some system being measured to the set of states of some measurement apparatus. The condition that be measure-preserving says the probability that the apparatus winds up in any state is the sum of the probabilities of all states of leading to that outcome:

Second, there’s a hypothesis . This is a guess about the probability that the system being measured is in the state given any measurement outcome The guess is the number .

Now, suppose we have any morphism

in From this we get two probability distributions on . First, we have the probability distribution given by

This is our best guess about the the probability that the system is in any given state, given our hypothesis and the probability distribution of measurement results. Second, we have the ‘true’ probability distribution .

In fact, this way of assigning relative entropies to morphisms defines a functor

where we use to denote the category with one object, the numbers as morphisms, and addition as composition. More precisely, if

is any morphism in we define

where is defined as in equation . This tells us how surprised we are when we learn the true probability distribution , if our measurement results were distributed according to and our hypothesis was .

The fact that is a functor is nontrivial and rather interesting! It says that given any composable pair of measurement processes:

the relative entropy of their composite is the sum of the relative entropies of the two parts:

We prove that is a functor. However, we go further: we characterize relative entropy by saying that up to a constant multiple, is the unique functor from to obeying three reasonable conditions.

Lower semicontinuity

The first condition is that is lower semicontinuous. The set of probability distibutions on a finite set naturally has the topology of an -simplex when has elements. The set has an obvious topology where it’s homeomorphic to a closed interval. However, with these topologies, the relative entropy does not define a continuous function

The problem is that

and is when and — but it’s when

So, it turns out that is only lower semicontinuous, meaning that if are sequences of probability distributions on with and then

We give the set of morphisms in its most obvious topology, and show that with this topology, maps morphisms to morphisms in a lower semicontinuous way.

(Lower semicontinuity may seem like an annoying property. But there’s a way to redeem it. There’s a sneaky topology on such that a function taking values in is lower semicontinuous (in the lim inf sense above) if and only if it’s continuous with respect to this sneaky topology!

Using this idea, we can make and into topological categories — that is, categories internal to Top — in such a way that lower semicontinuity simply says

is a continuous functor.

A bit confusingly, this sneaky topology on is called the upper topology. I’ve fallen in love with the upper topology on . Why?

Well, is a very nice rig, or ‘ring without negatives’. Addition is defined in the obvious way, and multiplication is defined in the almost-obvious way, except that

Even this is actually obvious if you remember that it’s required by the definition of a rig. But if you try to put the ordinary closed interval topology on , you’ll see multiplication is not continuous, because is infinite when but then it suddenly jumps down to zero when hits zero. However, multiplication is continuous if we give the upper topology! Then becomes a topological rig.)

Convex linearity

The second condition is that is convex linear. We describe how to take convex linear combinations of morphisms in and then the functor maps any convex linear combination of morphisms in to the corresponding convex linear combination of numbers in

Intuitively, this means that if we take a coin with probability of landing heads up, and flip it to decide whether to perform one measurement process or another, the expected information gained is times the expected information gain of the first process plus times the expected information gain of the second process.

(Operadically, the point is that both and are algebras of an operad P whose operations are convex linear combinations. The -ary operations in P are just probability distributions on an -element set. In other words, they’re points in the -simplex.

So, saying that is convex linear means that

is a map of P-algebras. But we avoid discussing this in our paper because , being a category, is just a ‘weak’ P-algebra, and we decided this would be too much for our poor little readers.

For those who like fine nuances: P is a topological operad, and and are algebras of this in the topological category TopCat. As I mentioned, is a ‘weak’ P-algebra, meaning the laws for convex linear combinations hold only up to coherent natural isomorphism. is strict… but to get convex linear combinations like to behave continuously, we have to give the upper topology!)

Vanishing on a subcategory

The third condition is that vanishes on morphisms where the hypothesis is optimal. By this, we mean that equation gives a probability distribution equal to the ‘true’ one, .

That makes a lot of sense conceptually: we don’t gain any information upon learning the truth about a situation if we already knew the truth!

(But the subcategory of where we keep all the objects but only these ‘optimal’ morphisms also has a nice category-theoretic significance. Tom Leinster called it FP in this post:

That’s because it’s the ‘free P-algebra on an internal P-algebra’, where P is the operad I mentioned. I won’t explain what this means here, because Tom did it! Suffice it to say that it’s a shockingly abstract piece of operad theory that nonetheless manages to capture the concept of entropy very neatly. But that’s plain old entropy, not relative entropy.)

The result

Here, then, is our main result:

Theorem. Any lower semicontinuous, convex-linear functor

that vanishes on every morphism with an optimal hypothesis must equal some constant times the relative entropy. In other words, there exists some constant such that

for any any morphism in

The proof

The proof is surprisingly hard. Or maybe we’re just surprisingly bad at proving things. But the interesting thing is this: the proof is swift and effective in the ‘generic’ case — the case where the support of the probability measures involved is the whole set they’re living on, and the constant is finite.

It takes some more work to handle the case where the probability measures have smaller support.

But the really hard work starts when we handle the case that, in the end, has . Then the proof becomes more like analysis than what you normally expect in category theory. We slowly corner the result, blocking off all avenues of escape. Then we close in, grab its neck, and strangle it, crushing its larynx ever tighter, as it loses the will to fight back and finally expires… still twitching.

You’ve got to read the proof to understand what I mean.

Re: Relative Entropy

You might include the name “cross-entropy” among your list – I saw it used a lot when I was thinking about these things.