Chasing the Tail of the Gaussian (Part 1)

Posted by John Baez

I recently finished reading Robert Kanigel’s biography of Ramanujan, The Man Who Knew Infinity. I enjoyed it a lot and wanted to learn a bit more about what Ramanujan actually did, so I’ve started reading Hardy’s book Ramanujan: Twelve Lectures on Subjects Suggested By His Life and Work. And I decided it would be good to think about one of the simplest formulas in Ramanujan’s first letter to Hardy.

It was a good idea, because I now believe all these formulas, which look like impressive yet mute monuments, are actually crystallized summaries of long and interesting stories — full of twists, turns and digressions.

The number $\pi$ is a bit bigger than 3; the number $e$ is a bit less. So you should want to take their average and get another number close to 3. Our story starts with the geometric mean $\sqrt{\pi e}$.

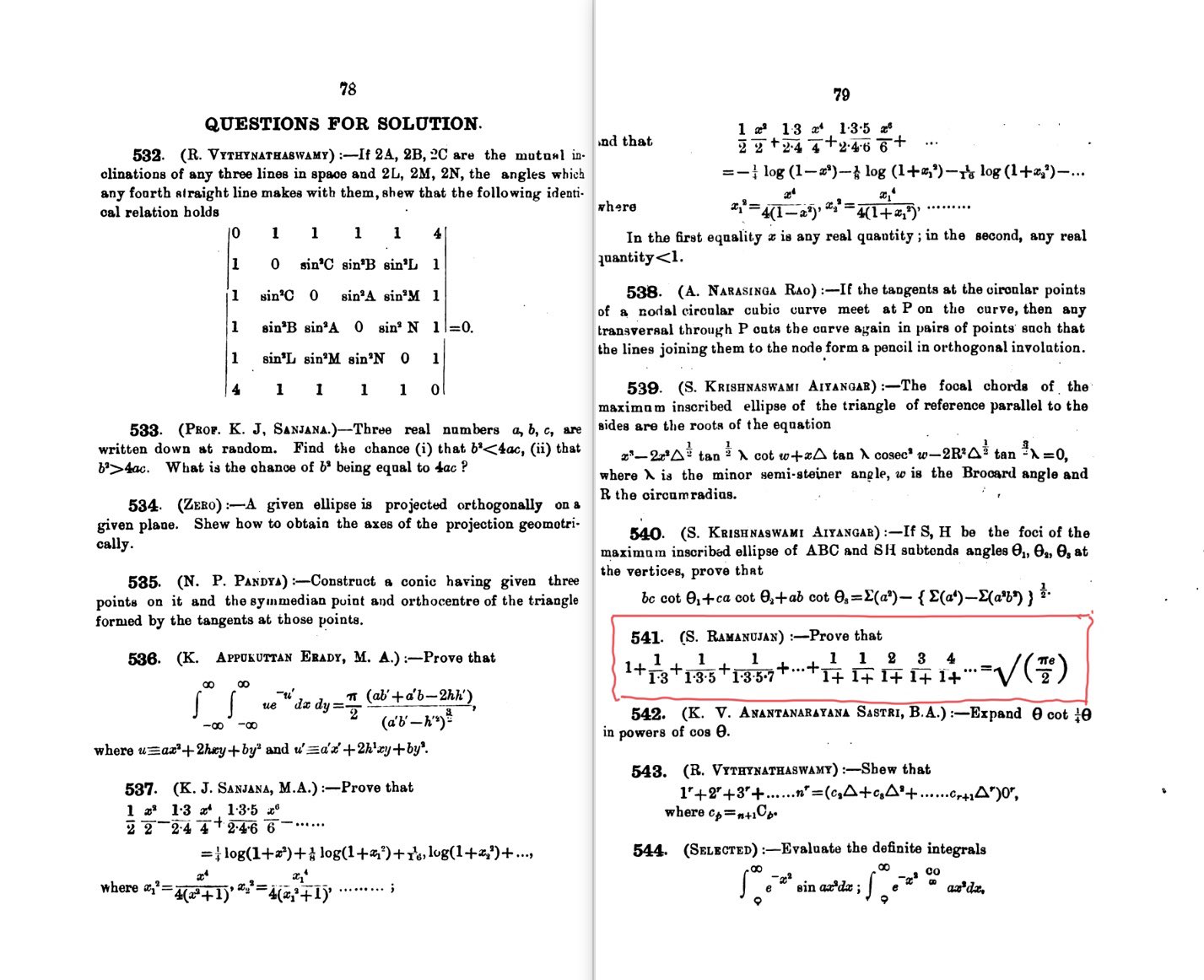

You may think it’s pointless to ruminate on this number. But in 1914, Ramanujan posed this puzzle in The Journal of the Indian Mathematical Society:

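Prove that

$$\sqrt{\frac{\pi e}{2}} = \left(1 + \frac{1}{1 \cdot 3} + \frac{1}{1 \cdot 3 \cdot 5} + \frac{1}{1 \cdot 3 \cdot 5 \cdot 7} + \cdots\right) + \cfrac{1}{1+\cfrac{1}{1+\cfrac{2}{1+\cfrac{3}{1+\ddots}}}}$$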

My first reaction to this formula was a mixture of awe, terror, and disgust. Awe because it’s surprising and beautiful. Terror because I imagine it being on some final exam that mathematicians take before we’re allowed into the pearly gates. And disgust, because mathematics is supposed to make sense, and this doesn’t.

Getting past those initial reactions, note that on the left we have two of our favorite constants combined in a multiplicative way, while on the right we have two impressive expressions added to each other. So it’s hard to know where to start… but the expression

$$1 + \frac{1}{1 \cdot 3} + \frac{1}{1 \cdot 3 \cdot 5} + \frac{1}{1 \cdot 3 \cdot 5 \cdot 7} + \cdots$$

reminds me of a Taylor series, and I know more about those than continued fractions, so let’s start there.

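There’s a power series

$$f(x) = x + \frac{x^3}{1 \cdot 3} + \frac{x^5}{1 \cdot 3 \cdot 5} + \frac{x^7}{1 \cdot 3 \cdot 5 \cdot 7} + \cdots$$

What function is this? If we knew, then we’d have a formula for

$$f(1) = 1 + \frac{1}{1 \cdot 3} + \frac{1}{1 \cdot 3 \cdot 5} + \frac{1}{1 \cdot 3 \cdot 5 \cdot 7} + \cdots$$

So let’s try to figure it out. We could try to guess a function that has this Taylor series, but another approach is to note that

$$f'(x) = 1 + \frac{x^2}{1} + \frac{x^4}{1 \cdot 3} + \frac{x^6}{1 \cdot 3 \cdot 5} + \cdots$$

looks a lot like $f(x)$. In fact

$$f'(x) = 1 + x f(x)$$

So let’s solve this differential equation! We need to use an integrating factor: we need to write $f(x)$ as some function $g(x)$ times a function that gets multiplied by $x$ when you differentiate it. The function $e^{x^2/2}$ has that property:

$$\frac{d}{dx} e^{x^2/2} = x e^{x^2/2}$$

so if we write our mystery function as

$$f(x) = e^{x^2/2} g(x)$$

then our differential equation becomes

$$x e^{x^2/2} g(x) + e^{x^2/2} g'(x) = 1 + x e^{x^2/2} g(x)$$

or in other words

$$g'(x) = e^{-x^2/2}$$

so

$$g(x) = \int e^{-x^2/2} \, dx$$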

Hey! It’s the integral of a Gaussian! This is important in probability theory. So we’re not just messing around with insane formulas: we’re doing math that’s connected to something useful.
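Before trusting the solution, it's easy to sanity-check the differential equation itself. Here is a minimal sketch, assuming the power series is $f(x) = \sum_{n \ge 0} x^{2n+1}/(1 \cdot 3 \cdots (2n+1))$ and the equation is $f'(x) = 1 + x f(x)$. It compares the two sides coefficient by coefficient using exact fractions. The helper names are made up for this illustration.

```python
from fractions import Fraction

def odd_product(n):
    """Return 1*3*5*...*(2n+1)."""
    result = 1
    for k in range(n + 1):
        result *= 2 * k + 1
    return result

def series_coefficients(terms):
    """Coefficients of f, indexed by power of x, truncated after `terms` nonzero terms."""
    coeffs = [Fraction(0)] * (2 * terms)
    for n in range(terms):
        coeffs[2 * n + 1] = Fraction(1, odd_product(n))
    return coeffs

def derivative(coeffs):
    """Coefficients of the termwise derivative."""
    return [k * c for k, c in enumerate(coeffs)][1:]

def one_plus_x_times(coeffs):
    """Coefficients of 1 + x * (series)."""
    shifted = [Fraction(0)] + list(coeffs)
    shifted[0] += 1
    return shifted

f = series_coefficients(8)      # powers 0..15
lhs = derivative(f)             # f'(x), powers 0..14
rhs = one_plus_x_times(f)       # 1 + x f(x), powers 0..16

# Compare only the powers that the truncated derivative actually knows about.
agree = lhs == rhs[:len(lhs)]
print("f' and 1 + x f agree through x^14:", agree)
```

This only checks finitely many coefficients, so it's evidence rather than a proof, but the pattern is the whole argument: differentiating $x^{2n+1}/(1 \cdot 3 \cdots (2n+1))$ cancels the last odd factor.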

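A famous fact is that you can’t do the integral of a Gaussian using elementary functions, but a lot is known about it: up to various fudge factors our integral is basically the error function. Anyway, since $f(0) = 0$ forces $g(0) = 0$, we get

$$g(x) = \int_0^x e^{-t^2/2} \, dt$$

so now we get

$$f(x) = e^{x^2/2} \int_0^x e^{-t^2/2} \, dt$$

and thus

$$1 + \frac{1}{1 \cdot 3} + \frac{1}{1 \cdot 3 \cdot 5} + \frac{1}{1 \cdot 3 \cdot 5 \cdot 7} + \cdots = \sqrt{e} \int_0^1 e^{-x^2/2} \, dx$$

We’ve evaluated our infinite series! And you’ll notice the square root of $e$ is showing up, which is nice. But not the square root of $\pi$.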
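If you'd like numerical reassurance, here is a small sketch that compares partial sums of the series with the closed form. It assumes the series is $x + x^3/(1 \cdot 3) + x^5/(1 \cdot 3 \cdot 5) + \cdots$ and the closed form is $e^{x^2/2} \int_0^x e^{-t^2/2} \, dt$, and it rewrites the integral as $\sqrt{\pi/2} \, \mathrm{erf}(x/\sqrt{2})$ so that Python's standard `math.erf` can do the work. The function names are just for illustration.

```python
import math

def series_f(x, terms=60):
    """Partial sum of x + x^3/(1*3) + x^5/(1*3*5) + ..."""
    total = 0.0
    term = x  # the n = 0 term
    for n in range(terms):
        total += term
        term *= x * x / (2 * n + 3)  # next term: two more powers of x, next odd factor
    return total

def closed_form_f(x):
    """e^(x^2/2) times the integral of e^(-t^2/2) from 0 to x, via math.erf."""
    integral = math.sqrt(math.pi / 2) * math.erf(x / math.sqrt(2))
    return math.exp(x * x / 2) * integral

for x in (0.5, 1.0, 2.0):
    print(x, series_f(x), closed_form_f(x))
```

At $x = 1$ both columns come out near 1.41, which is the value of the series in Ramanujan's puzzle.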

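Where does this leave us? We were trying to show

$$\sqrt{\frac{\pi e}{2}} = \left(1 + \frac{1}{1 \cdot 3} + \frac{1}{1 \cdot 3 \cdot 5} + \frac{1}{1 \cdot 3 \cdot 5 \cdot 7} + \cdots\right) + \cfrac{1}{1+\cfrac{1}{1+\cfrac{2}{1+\cfrac{3}{1+\ddots}}}}$$

and now we know the first big expression at right. So we just need to show

$$\sqrt{\frac{\pi e}{2}} = \sqrt{e} \int_0^1 e^{-x^2/2} \, dx + \cfrac{1}{1+\cfrac{1}{1+\cfrac{2}{1+\cfrac{3}{1+\ddots}}}}$$

It’s very tempting to divide by $\sqrt{e}$, so let’s do that, and solve for the continued fraction:

$$\frac{1}{\sqrt{e}} \, \cfrac{1}{1+\cfrac{1}{1+\cfrac{2}{1+\cfrac{3}{1+\ddots}}}} = \sqrt{\frac{\pi}{2}} - \int_0^1 e^{-x^2/2} \, dx$$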

So this is the equation we need to show.

Now what? It gets a bit harder now. But it helps to remember the integral of a Gaussian from $-\infty$ to $\infty$. When I was in high school, I read this anecdote:

Lord Kelvin wrote

$$\int_{-\infty}^\infty e^{-x^2} \, dx = \sqrt{\pi}$$

on the blackboard, and then said “A mathematician is one to whom that is as obvious as that twice two makes four is to you”.

It’s a somewhat obnoxious thing to say (maybe to a group of students?), but since I wanted to be a mathematician, I made sure I knew how to do this integral!

Note that the square root of $\pi$ shows up in Kelvin’s integral, and we know that number appears in our problem here. So that’s a good sign. But these days I prefer to remember this:

$$\int_{-\infty}^\infty e^{-x^2/2} \, dx = \sqrt{2\pi}$$

because $2\pi$ is more fundamental than $\pi$, and $e^{-x^2/2}$ is more fundamental than $e^{-x^2}$ in many contexts, like the harmonic oscillator in physics (because when you differentiate it you get $-x e^{-x^2/2}$), or probability theory (because $e^{-x^2/2}$ gives the Gaussian of variance $1$ when you normalize it). So it’s easy for me to notice that

$$\int_0^\infty e^{-x^2/2} \, dx = \sqrt{\frac{\pi}{2}}$$

And this is a number you’ve just seen! — right before I said “So this is the equation we need to show.”

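If we plug this formula into that equation, we get this:

$$\frac{1}{\sqrt{e}} \, \cfrac{1}{1+\cfrac{1}{1+\cfrac{2}{1+\cfrac{3}{1+\ddots}}}} = \int_0^\infty e^{-x^2/2} \, dx - \int_0^1 e^{-x^2/2} \, dx$$

or in other words this:

$$\frac{1}{\sqrt{e}} \, \cfrac{1}{1+\cfrac{1}{1+\cfrac{2}{1+\cfrac{3}{1+\ddots}}}} = \int_1^\infty e^{-x^2/2} \, dx$$

or if you prefer, this:

$$\cfrac{1}{1+\cfrac{1}{1+\cfrac{2}{1+\cfrac{3}{1+\ddots}}}} = \sqrt{e} \int_1^\infty e^{-x^2/2} \, dx$$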

So this is what we need to show.
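We haven't proved anything about the continued fraction yet, but we can at least check numerically that the claim is plausible. The sketch below assumes the continued fraction is $1/(1 + 1/(1 + 2/(1 + 3/(1 + \cdots))))$, truncates it at a finite depth (the depth of 1000 is an arbitrary choice of mine), and evaluates it from the bottom up. For the right-hand side it uses $\int_1^\infty e^{-x^2/2} \, dx = \sqrt{\pi/2} \, \mathrm{erfc}(1/\sqrt{2})$ with the standard `math.erfc`.

```python
import math

def ramanujan_cf(depth=1000):
    """1/(1 + 1/(1 + 2/(1 + 3/(1 + ...)))) truncated after `depth` levels."""
    tail = 1.0
    for k in range(depth, 0, -1):  # work from the innermost level outward
        tail = 1.0 + k / tail
    return 1.0 / tail

def odd_product_series(terms=60):
    """Partial sum of 1 + 1/(1*3) + 1/(1*3*5) + ..."""
    total, term = 0.0, 1.0
    for n in range(terms):
        total += term
        term /= 2 * n + 3
    return total

cf = ramanujan_cf()
gaussian_tail = math.sqrt(math.e) * math.sqrt(math.pi / 2) * math.erfc(1 / math.sqrt(2))
print("continued fraction:       ", cf)
print("sqrt(e) * Gaussian tail:  ", gaussian_tail)
print("series + continued frac.: ", odd_product_series() + cf)
print("sqrt(pi * e / 2):         ", math.sqrt(math.pi * math.e / 2))
```

The truncated fractions alternate above and below the limit and creep toward it rather slowly at first (depths 1, 2, 3 give 0.5, 0.75, 0.6), which is why the sketch goes so deep.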

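We’ve peeled off the soft outer layer of the problem, using just elementary techniques. Now it gets harder, but also more interesting. At this point it really pays to prove something more general, namely

$$\int_x^\infty e^{-t^2/2} \, dt = e^{-x^2/2} \, \cfrac{1}{x+\cfrac{1}{x+\cfrac{2}{x+\cfrac{3}{x+\ddots}}}}$$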

In fact a formula essentially like this is the eighth one listed by Hardy in his first lecture on Ramanujan, where he’s discussing a few of the many formulas in the first letter he received from Ramanujan. Hardy said it “seemed vaguely familiar” when he first saw it, adding that “actually it is classical; it is a formula of Laplace first proved properly by Jacobi”. So, it was not one of the really novel ones that wowed Hardy… which is why I’m talking about it: I’m not ready to tackle Ramanujan’s serious work.

I can guess why Laplace and Jacobi cared about this formula. Now we’re not just dealing with a single continued fraction that equals a specific number: we’re dealing with a continued fraction formula for the tail of a Gaussian in general!

That is, if IQs were distributed according to some Gaussian distribution of known mean and standard deviation, you might ask “what’s the probability that someone has an IQ over 200?” And you could use a formula like

$$\frac{1}{\sqrt{2\pi}} \int_x^\infty e^{-t^2/2} \, dt = \frac{1}{\sqrt{2\pi}} \, e^{-x^2/2} \, \cfrac{1}{x+\cfrac{1}{x+\cfrac{2}{x+\cfrac{3}{x+\ddots}}}}$$

to estimate this answer. Here I’ve got a Gaussian of standard deviation 1, and I’ve normalized it so we’re getting the probability that it takes a value $\ge x$.
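Here is a rough sketch of how such an estimate might go in practice, for $x > 0$. It truncates the continued fraction $1/(x + 1/(x + 2/(x + 3/(x + \cdots))))$ at a finite depth and compares the result with the tail computed from the standard `math.erfc`. The IQ scale with mean 100 and standard deviation 15 is my own assumption for illustration, as are the function names and the default depth.

```python
import math

def laplace_cf(x, depth=1000):
    """1/(x + 1/(x + 2/(x + 3/(x + ...)))) truncated after `depth` levels, for x > 0."""
    tail = x
    for k in range(depth, 0, -1):  # evaluate from the innermost level outward
        tail = x + k / tail
    return 1.0 / tail

def upper_tail(x, depth=1000):
    """P(Z >= x) for a standard normal Z, using the continued fraction (x > 0)."""
    return math.exp(-x * x / 2) / math.sqrt(2 * math.pi) * laplace_cf(x, depth)

def upper_tail_erfc(x):
    """The same probability via the complementary error function, for comparison."""
    return 0.5 * math.erfc(x / math.sqrt(2))

# Hypothetical IQ scale: mean 100, standard deviation 15 (an assumption, not from the post).
z = (200 - 100) / 15
print("z-score for IQ 200:", z)
print("tail via continued fraction:", upper_tail(z))
print("tail via erfc:              ", upper_tail_erfc(z))
```

The further out in the tail you go, the fewer levels of the continued fraction you need, which is the opposite of how the power series behaves.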

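I will not try to go further today; next time I’ll try to say more about the formula

$$\int_x^\infty e^{-t^2/2} \, dt = e^{-x^2/2} \, \cfrac{1}{x+\cfrac{1}{x+\cfrac{2}{x+\cfrac{3}{x+\ddots}}}}$$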

A few final remarks:

1) I haven’t seen the papers by Laplace and Jacobi. If anyone can help me track them down, I’d appreciate it.

2) I just learned that Ramanujan’s first letter had at least 11 pages of formulas, at least two of which are now lost.

3) I got a lot of help from this blog article:

- A continued fraction for error function by Ramanujan, Paramanand’s Math Notes, June 21, 2014.

which in turn is largely based on this:

- Omran Kouba, Inequalities related to the error function.

So if you want to ‘cheat’ and read ahead, you can take a look at these. I may wind up needing your help.

(For Part 2, go here.)

Re: Chasing the Tail of the Gaussian (Part 1)

I think these might be what you are looking for:

P. S. Laplace, Traité de Mécanique Céleste vol IV, Livre X.i§5, direct link to page 493 in the Bowditch translation.

C. G. J. Jacobi, De fractione continua, in quam integrale evolvere licet, Journal für die reine und angewandte Mathematik 12 (1834) 346–347, Göttinger Digitalisierungszentrum.

Though they are not in precisely the same form as you give.